Disclaimer!

I’m not an expert; computer graphics is my hobby. When I started learning DirectX 12 I was already quite comfortable with DirectX 11, but it was still difficult to switch. Even after several months of investigation I still have the feeling that I’ve only scratched the surface. I’m constantly learning, and this post is a way to organize my thoughts. I’ve found that trying to explain complex things helps me understand them better. Though I wrote the article for myself, I hope you’ll find it useful too.

DirectX 12 is low level. It has many concepts, and to make your code work well you need to take a lot of things into account. You need to profile a lot, and you need to know the hardware. For example, you need to know that changing descriptor heaps is a heavy operation. I have no idea what happens in the hardware or why it’s expensive; I’m just following the guidelines, trying to remember them, and reading GPU specs in parallel.

I also assume that you have experience with previous DirectX versions, because I won’t explain what a swap chain or a back buffer is. You should be familiar with tessellation: what tessellation factors are, what a patch constant function is, and why you need hull and domain shaders. A good overview of DirectX 11 tessellation can be found here. You also need to know basic Windows programming, because we need to create a window and I won’t explain how to do it.

Preface

In this tutorial we’ll render a teapot. But not just a static mesh, no. We’ll render a tessellated teapot. Why did I choose this? Well, because you can find plenty of HelloWorld examples on the web. I didn’t want to create another HelloWorld, but something that covers different areas of the API and at the same time stays simple.

We’re going to use 16-point patches for the teapot. We’ll provide the control point positions in one vertex buffer and the patch indices in one index buffer. For colors and transforms (more on this later) we’ll use structured buffers.

DirectX tutorials usually follow the same pattern: initialization, resource creation, rendering. I decided to go a slightly different way. First we’ll create the most important part, and later we’ll add the components that support it, one after another, as they are required. The most important part, in my opinion, is the shaders. After all, this is what we want the GPU to execute. This approach helped me tie the different parts of the API together and understand how they relate to each other.

In order to write a shader we need to figure out what data it operates on. So let’s first define it.

Teapot Data

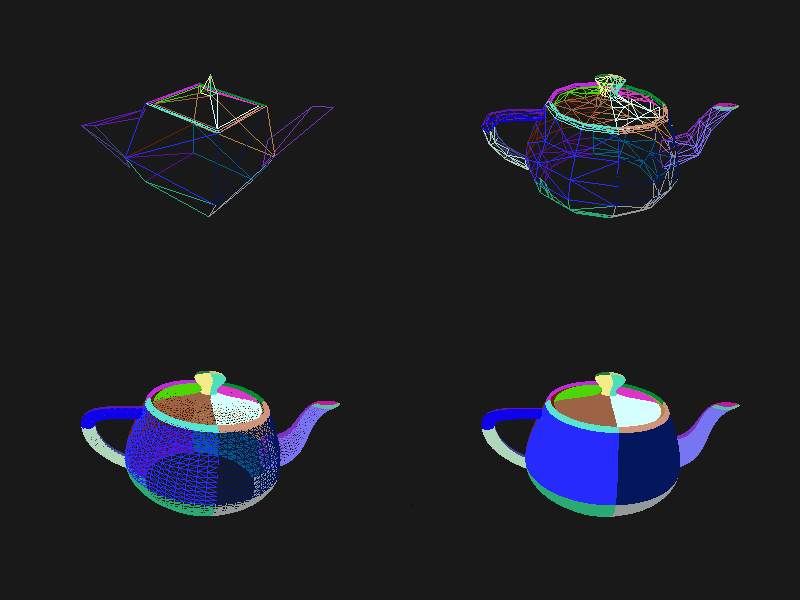

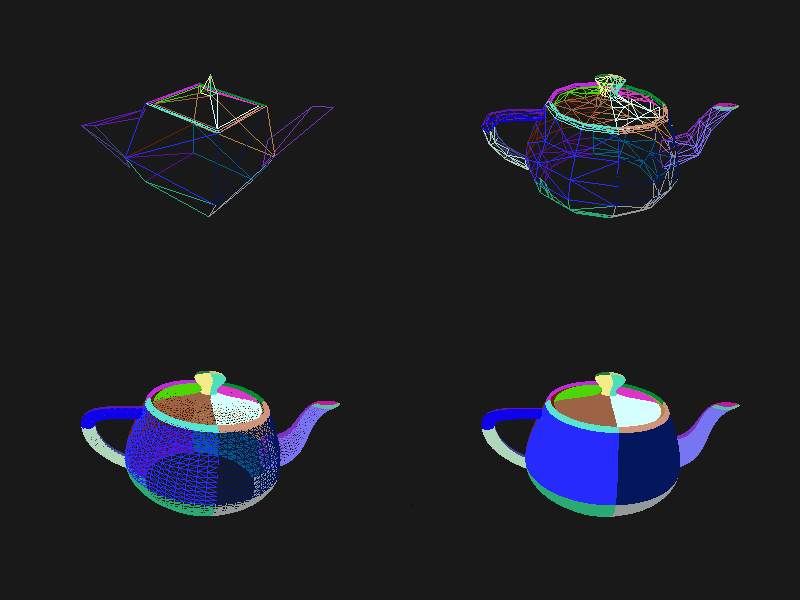

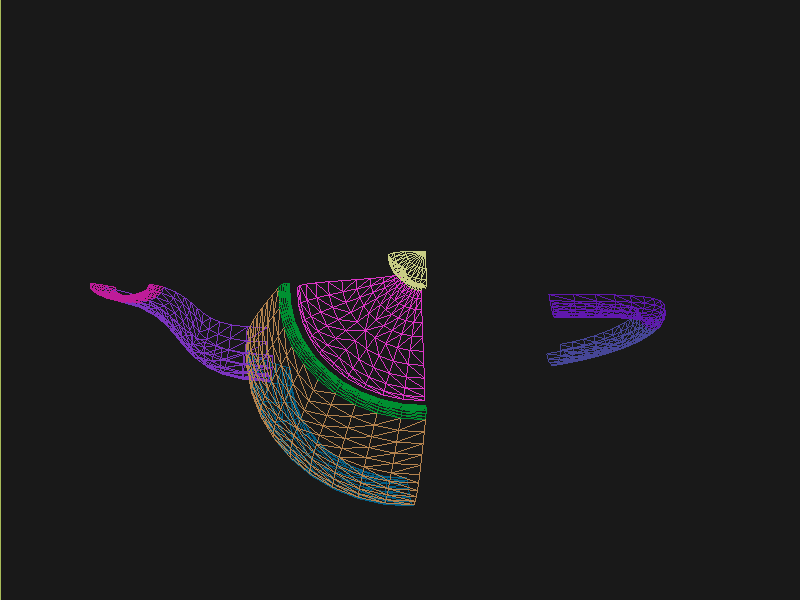

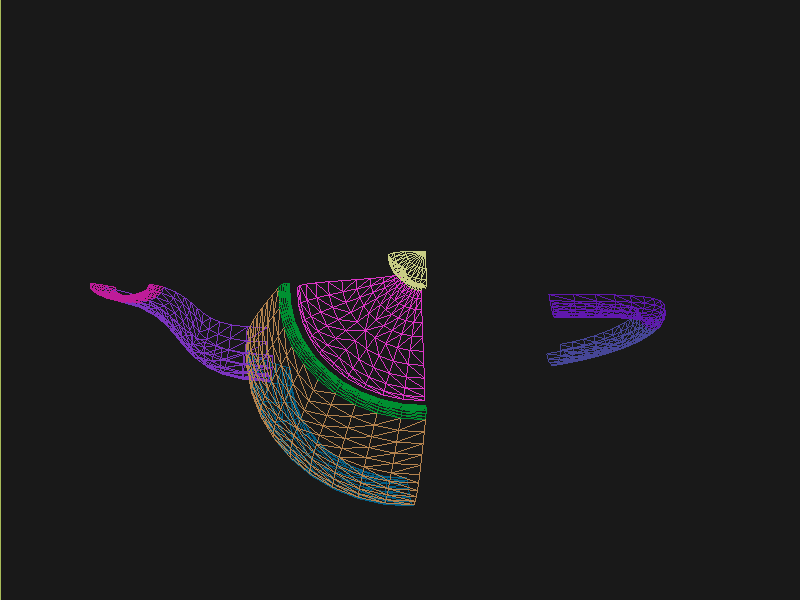

Since we’re going to use tessellation, we’re not interested in a “usual” mesh made of triangles. We need patches. Of course somebody has already done this for us and we can use ready-made data. For example, here is the set of 16-point patches that describe a teapot. Unfortunately we can’t use this data as is and need to adjust it a little. The set doesn’t have a bottom, which is OK, we won’t use one either. But the existing patches describe only parts of the teapot: the rim, body and lid patches describe only a quarter of the teapot, and the handle and spout patches describe only half of the respective parts. So if we render this set we’ll get this:

There are several ways to fix it. One way is to use a separate draw call for every part, so that we can render the rim four times with different transformations. Another way is to draw a part once but use instancing. This way we’ll have a draw call for every family of parts (rim, body etc.), and the repeated parts will be instances.

We’ll go another way and render everything in one draw call. For this we’ll duplicate the indices the required number of times and also provide a transformation for every patch. Let’s take the rim as an example. Instead of having one patch for the quarter we’ll have 4 patches for the entire circle, which means 16 * 4 = 64 indices for this part. In the shader, knowing the patch id, we can apply a transform. In our case we’ll rotate the initial patch around an axis by 0, 90, 180 and 270 degrees. All this means that together with the point positions and indices we need to provide the transformation data as well. Additionally, to separate the patches visually, we’ll use different colors, which should also be provided as separate data. In total we’ll have 28 patches, and our data will consist of a list of points (some points are shared between patches, that’s why we need indices), a list of indices (28 * 16 = 448), a list of transforms (28 matrices) and a list of colors (28 randomly generated RGB colors). The final data can be found here.
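The index duplication for the rim can be sketched on the CPU side. This is only a minimal illustration, not the demo’s actual loader: ExpandedPatches and appendRotatedCopies are hypothetical names, and a rotation angle per patch stands in for the full transform matrix that the demo stores.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <vector>

constexpr int kControlPointsPerPatch = 16;

// Hypothetical container: one index list for the single draw call and
// one rotation angle (in degrees) per patch, addressed by patch id.
struct ExpandedPatches
{
    std::vector<std::uint32_t> indices;
    std::vector<float> rotationDegrees;
};

// Appends `copies` patches that together cover a full turn.
// Every copy reuses the same 16 indices; only the rotation differs.
void appendRotatedCopies(const std::array<std::uint32_t, kControlPointsPerPatch>& patch,
                         int copies, ExpandedPatches& out)
{
    assert(copies > 0);
    for (int copy = 0; copy < copies; ++copy)
    {
        out.indices.insert(out.indices.end(), patch.begin(), patch.end());
        out.rotationDegrees.push_back(360.0f * static_cast<float>(copy) / static_cast<float>(copies));
    }
}
```

For a quarter patch, calling appendRotatedCopies(rimPatch, 4, out) adds 64 indices and the angles 0, 90, 180 and 270. The patch id seen by the shader then selects the matching entry.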

Shaders

In our example we’ll use vertex, hull, domain and pixel shaders.

Vertex shader

The first shader in our pipeline is the vertex shader. All it does is accept a control point position from the application and pass it to the hull shader. Because of its simplicity I won’t show it here, but you can find it on GitHub.

Just to remind you - the vertex shader is called once for every control point in a patch. For 28 patches (recall that this is the number of patches used for the model) of 16 points each, this is 28 * 16 = 448 times.

Hull shader

This shader, as you already know, receives the control point positions from the vertex shader and also some data from the application in the form of constants, which we’ll use as tessellation factors for the edges and the inside of the patch.

#define NUM_CONTROL_POINTS 16

struct PatchTesselationFactors

{

int edge;

int inside;

};

ConstantBuffer<PatchTesselationFactors> tessFactors : register(b0);

struct VertexToHull

{

float3 pos : POSITION;

};

struct PatchConstantData

{

float edgeTessFactor[4] : SV_TessFactor;

float insideTessFactor[2] : SV_InsideTessFactor;

};

struct HullToDomain

{

float3 pos : POSITION;

};

PatchConstantData calculatePatchConstants()

{

PatchConstantData output;

output.edgeTessFactor[0] = tessFactors.edge;

output.edgeTessFactor[1] = tessFactors.edge;

output.edgeTessFactor[2] = tessFactors.edge;

output.edgeTessFactor[3] = tessFactors.edge;

output.insideTessFactor[0] = tessFactors.inside;

output.insideTessFactor[1] = tessFactors.inside;

return output;

}

[domain("quad")]

[partitioning("integer")]

[outputtopology("triangle_cw")]

[outputcontrolpoints(NUM_CONTROL_POINTS)]

[patchconstantfunc("calculatePatchConstants")]

HullToDomain main(InputPatch<VertexToHull, NUM_CONTROL_POINTS> input, uint i : SV_OutputControlPointID)

{

HullToDomain output;

output.pos = input[i].pos;

return output;

}

Here you can see that the hull shader outputs the same 16 control points and uses integer partitioning and the quad domain. Also note the new HLSL 5.1 syntax for the constant buffer:

ConstantBuffer<PatchTesselationFactors> tessFactors : register(b0);

Though you can use the old syntax, I like the new one more. Beyond this, the shader is a simple pass-through, like the vertex shader.

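The main() function is invoked once for every output control point, that is 16 times per patch, while the patch constant function is invoked once per patch, 28 times in total (the number of patches).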

Domain shader

Finally we’ve arrived at the point of interest. This is the place where basically all the work in our program is done.

#define NUM_CONTROL_POINTS 16

struct ConstantBufferPerObj

{

row_major float4x4 wvpMat;

};

ConstantBuffer<ConstantBufferPerObj> constPerObject : register(b0);

struct PatchTransform

{

row_major float4x4 transform;

};

StructuredBuffer<PatchTransform> patchTransforms : register(t0);

struct PatchColor

{

float3 color;

};

StructuredBuffer<PatchColor> patchColors : register(t1);

struct PatchConstantData

{

float edgeTessFactor[4] : SV_TessFactor;

float insideTessFactor[2] : SV_InsideTessFactor;

};

struct HullToDomain

{

float3 pos : POSITION;

};

struct DomainToPixel

{

float4 pos : SV_POSITION;

float3 color : COLOR;

};

float4 bernsteinBasis(float t)

{

float invT = 1.0f - t;

return float4(invT * invT * invT, // (1-t)^3

3.0f * t * invT * invT, // 3t(1-t)^2

3.0f * t * t * invT, // 3t^2(1-t)

t * t * t); // t^3

}

float3 evaluateBezier(const OutputPatch<HullToDomain, NUM_CONTROL_POINTS> bezpatch, float4 basisU, float4 basisV)

{

float3 value = float3(0, 0, 0);

value = basisV.x * (bezpatch[0].pos * basisU.x + bezpatch[1].pos * basisU.y + bezpatch[2].pos * basisU.z + bezpatch[3].pos * basisU.w);

value += basisV.y * (bezpatch[4].pos * basisU.x + bezpatch[5].pos * basisU.y + bezpatch[6].pos * basisU.z + bezpatch[7].pos * basisU.w);

value += basisV.z * (bezpatch[8].pos * basisU.x + bezpatch[9].pos * basisU.y + bezpatch[10].pos * basisU.z + bezpatch[11].pos * basisU.w);

value += basisV.w * (bezpatch[12].pos * basisU.x + bezpatch[13].pos * basisU.y + bezpatch[14].pos * basisU.z + bezpatch[15].pos * basisU.w);

return value;

}

[domain("quad")]

DomainToPixel main(PatchConstantData input, float2 domain : SV_DomainLocation, const OutputPatch<HullToDomain, NUM_CONTROL_POINTS> patch, uint patchID : SV_PrimitiveID)

{

// Evaluate the basis functions at (u, v)

float4 basisU = bernsteinBasis(domain.x);

float4 basisV = bernsteinBasis(domain.y);

// Evaluate the surface position for this vertex

float3 localPos = evaluateBezier(patch, basisU, basisV);

float4x4 transform = patchTransforms[patchID].transform;

float4 localPosTransformed = mul(float4(localPos, 1.0f), transform);

DomainToPixel output;

output.pos = mul(localPosTransformed, constPerObject.wvpMat);

output.color = patchColors[patchID].color;

return output;

}

Going from the top, we see that we’re operating on the same 16-point patch; we have a constant buffer for the teapot’s world-view-projection transform, a structured buffer for the patch transforms and a structured buffer for the patch colors. In practice we could use one structured buffer for both transforms and colors, but I deliberately split it in two to show how we can bind several resources through a descriptor table (more on this later). All this data comes from the application.

There are also structs: PatchConstantData and HullToDomain are the data from the hull shader (remember that the position is passed through from the vertex shader, which in turn gets it from the input assembler), and DomainToPixel is the data we pass further down the pipeline, to the pixel shader.

Next comes pure math. In the main() function we have the list of control points for one patch (16 points), and we need to evaluate the patch so that we can assign a position to each new vertex generated by the tessellator. A good overview of the math behind it can be found here. Also, this presentation is very good reading about patch tessellation in DirectX 11 (to be honest, I took most of the code from there).

So what are we doing in the main() function? The first 3 parameters are pretty standard: the constant data we defined in the hull shader (not used here, but it has to be provided), the uv coordinates of our point in the quad domain (generated by the tessellator), and the original patch from the hull shader. The last parameter, patchID, with its special semantic, deserves a little attention. As you remember, our demo has 28 patches in total and we want to apply some per-patch parameters, for example a color. That means that every generated vertex of the same patch needs to get the same color and pass it to the pixel shader. This is where the SV_PrimitiveID semantic comes to the rescue: for every vertex of the same patch (no matter how many vertices were generated) this value is the same. The first patch gets an id of 0, the second patch gets 1 and so on. One thing worth remembering: all patches should be rendered in one draw call. Every new draw call resets the id (as does every new instance in instanced drawing).

First we find the vertex position in patch space. Next, with the help of the patch id, we fetch the patch transform (recall the example - we need to rotate the rim 4 times) and apply it to the vertex. Then we transform the vertex to homogeneous clip space by multiplying it by the world-view-projection matrix. In the final step we read the color from the structured buffer and send this data to our last programmable stage, the pixel shader.

This function is called for every vertex generated by the tessellator. The number of generated vertices depends on the tessellation factors (edge and inside for the quad patch) and the partitioning scheme ([partitioning("integer")] in the hull shader).
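To check the Bezier math outside the GPU, the evaluation from the domain shader can be mirrored on the CPU. This is a rough sketch rather than code from the demo: Vec3 is a hypothetical stand-in for float3, and the control points are assumed to be stored row by row, 4 per row, as the shader indexes them.

```cpp
#include <array>
#include <cassert>

struct Vec3 { float x, y, z; };

// Cubic Bernstein basis at t, in the same order as the HLSL bernsteinBasis().
std::array<float, 4> bernsteinBasis(float t)
{
    const float invT = 1.0f - t;
    std::array<float, 4> basis = {{ invT * invT * invT,      // (1-t)^3
                                    3.0f * t * invT * invT,  // 3t(1-t)^2
                                    3.0f * t * t * invT,     // 3t^2(1-t)
                                    t * t * t }};            // t^3
    return basis;
}

// Evaluates a bicubic Bezier patch: the row index pairs with v and the
// column index pairs with u, as in the domain shader's evaluateBezier().
Vec3 evaluateBezier(const std::array<Vec3, 16>& patch, float u, float v)
{
    assert(u >= 0.0f && u <= 1.0f && v >= 0.0f && v <= 1.0f);
    const std::array<float, 4> basisU = bernsteinBasis(u);
    const std::array<float, 4> basisV = bernsteinBasis(v);
    Vec3 value = { 0.0f, 0.0f, 0.0f };
    for (int row = 0; row < 4; ++row)
    {
        for (int col = 0; col < 4; ++col)
        {
            const float weight = basisV[row] * basisU[col];
            const Vec3& p = patch[row * 4 + col];
            value.x += weight * p.x;
            value.y += weight * p.y;
            value.z += weight * p.z;
        }
    }
    return value;
}
```

A handy property for testing: at the domain corners the surface passes exactly through the corner control points, so evaluateBezier(patch, 0, 0) returns the first control point and evaluateBezier(patch, 1, 1) returns the last one.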

Pixel shader

This is also a very simple shader that doesn’t even need to be discussed. You can find the code here.

That’s basically it - we have a program and we need to make our hardware run it. All the other code exists just for this purpose: to help the GPU execute the shaders. To summarize things, I drew a diagram that shows the shader stages and the resources we need.

A couple of things to note. Resources are stored in GPU memory. The GPU has no idea what’s stored there or how to interpret it - it’s just a blob of data. It’s our task to tell it where the data resides, and what its size and format are. For the vertex buffer and the index buffer it’s pretty easy: we create these buffers and later tell the GPU to use them with the ID3D12GraphicsCommandList::IASetVertexBuffers() and ID3D12GraphicsCommandList::IASetIndexBuffer() methods. On the diagram I drew a solid arrow from the input assembler to these resources. With other resources things are different. There’s no method like DSSetStructuredBufferInSlot() or similar, and we need to use a special structure called the root signature to bind shaders and resources together. That’s why there are question marks between the shaders and the resources. We’ll find out how to bind resources in the next sections. On the diagram I also specified the size of our data together with the aligned size (for example 1416B / 64kB for the vertex buffer). In DirectX 12 (and 11) buffers are aligned to 64kB. We can specify this value during resource creation or let the API do it for us. That means that if we have a lot of small buffers, we waste a lot of space. But it’s just an interesting point and we shouldn’t worry about it in our example.
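To get a feeling for how much space the alignment costs, the rounding can be written down in a few lines. This is just a sketch of the arithmetic, with hypothetical helper names (alignUp, wastedBytes); the 64kB constant is the value quoted above.

```cpp
#include <cassert>
#include <cstdint>

// 64 kB, the buffer alignment quoted in the text.
constexpr std::uint64_t kBufferAlignment = 64 * 1024;

// Rounds size up to the next multiple of alignment (alignment must be a power of two).
std::uint64_t alignUp(std::uint64_t size, std::uint64_t alignment)
{
    assert(alignment != 0 && (alignment & (alignment - 1)) == 0);
    return (size + alignment - 1) & ~(alignment - 1);
}

// Bytes reserved by the alignment but not used by the data itself.
std::uint64_t wastedBytes(std::uint64_t size, std::uint64_t alignment)
{
    return alignUp(size, alignment) - size;
}
```

For the 1416-byte vertex buffer, alignUp(1416, kBufferAlignment) gives 65536, so 64120 bytes of that allocation stay unused.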

Briefly about Descriptors

As I mentioned above, the GPU can’t use resource memory directly. How can we say, then, that some memory is a structured buffer, for example? As you have already guessed - with a descriptor (another name is view). This is a small structure that describes the resource: its format, size etc. Since this information is used by the GPU, it’s convenient to store it in GPU memory as well. We keep descriptors in a special place called a descriptor heap. We’ll look at descriptors more closely in later sections, but for now you just need to remember that a resource stored in memory is just a bunch of bits and bytes. This bunch can be described with descriptors - lightweight data that tells the GPU how to interpret a particular part of memory. These descriptors are stored in GPU memory in descriptor heaps. Of course DirectX wouldn’t be DirectX if everything were so easy - there are different ways to provide information to the GPU. For example, we can bypass the descriptor heap and pass a descriptor directly, or avoid a descriptor altogether! We’ll cover these options in the course of this article.
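The idea that a resource is only bytes and a descriptor is the recipe for reading them can be imitated in plain C++. This is an analogy under my own assumptions, not the real D3D12 structures: StructuredBufferView and readColor are hypothetical names, and the blob is an ordinary byte vector instead of GPU memory.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

struct Color { float r, g, b; };

// Hypothetical stand-in for a descriptor: it holds no data, only the facts
// needed to interpret a region of the blob.
struct StructuredBufferView
{
    std::size_t firstByte;
    std::size_t elementCount;
    std::size_t strideInBytes;
};

// Reads element `index` by interpreting the raw bytes through the view.
Color readColor(const std::vector<unsigned char>& blob, const StructuredBufferView& view, std::size_t index)
{
    assert(index < view.elementCount);
    assert(view.strideInBytes >= sizeof(Color));
    const std::size_t offset = view.firstByte + index * view.strideInBytes;
    assert(offset + sizeof(Color) <= blob.size());
    Color color;
    std::memcpy(&color, blob.data() + offset, sizeof(Color));
    return color;
}
```

The same blob could be read through a different view (another stride or another start offset) and yield completely different values, which is why the data alone is not enough.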

Code Organization

When I started to write this tutorial I wanted to make it as simple as possible and put everything in one file. But when this file grew to more than 1000 lines I decided to split the code into several logical units. Window is a class which encapsulates window creation and accepts a key press callback in the form of a std::function. We’ll use this callback to change demo parameters. Graphics is a base class for our demo. It creates a Window and also initializes d3d: for example, it creates the device, swap chain, depth buffer, back buffers, command list and so on. TeapotTutorial extends this class and adds the functionality related to our demo - resource creation and rendering. I’ll describe why each method exists, and we’ll start with the creation of the root signature.

Root Signature

At this point we know that shaders require resources and that these resources should be bound to the correct resource slots (b0 for a constant buffer, t0 for a structured buffer, for example). In DirectX 12 we bind them with a special interface - ID3D12RootSignature. With the help of this interface we can describe which resources a shader needs and in which slots. We can say that the signature only declares the input parameters, just like a usual C++ function signature. For example:

void rootSignature(std::array<int, 2> constants, XMFLOAT4X4* wvpMatrix, std::vector<XMFLOAT3*>* colors);

What we see here is that our function expects 3 parameters: two ints copied by value, a pointer to a matrix, and a pointer to a vector of pointers to some colors. This is what happens when we use these values. The first parameter - two ints - is copied to registers, so accessing it is extremely fast. For the second parameter we need to dereference a pointer, which leads to a memory read with a potential cache miss, so it’s slower than the first parameter. The third parameter is the slowest one: to read a color we need to dereference the vector first and then dereference the element we want to access, which means two indirections.

Please notice that this is just a signature - it doesn’t tell us what the actual parameter values are. We can use as many different combinations of arguments as we can imagine with a single signature; the only requirement is that we keep the types correct. Why did I tell you all this? Because this is exactly how the root signature works! We specify the input parameters and their types, and later at runtime we call the function, passing the actual data.

As you remember we have 4 resources for our demo - hull constant buffer, domain constant buffer and 2 domain structured buffers.

“But there are also vertex and index buffers”, somebody may say. Right, but they are special buffers: we need to create the resources and the corresponding views, and pass these views directly to the pipeline in the command list (as we’ll see later). These views don’t even need a descriptor heap!

Also, as we saw previously, the information about these resources should be stored in descriptors, which in turn should be stored in descriptor heaps. But I also mentioned that there are other ways to pass data around. Here’s how we’ll do it in our demo:

- Tessellation factors for the hull shader we’ll pass directly in the root signature. That means we don’t need to create a descriptor, a descriptor heap or even the resource itself! This works because we can pass 32-bit constants in the root signature and they appear in the shader as a constant buffer. Since we have only 2 tessellation factors, this way of passing them looks like a good choice. Moreover, this data is accessed in the shader without indirection, just like std::array<int, 2> in the example C++ function signature!

- For the domain shader’s constant buffer we’ll use a descriptor. But this descriptor will be passed as a part of the root signature, which means we can bypass the descriptor heap. The descriptor is inlined in the root signature - that’s why we don’t need to store it anywhere else. With a root descriptor the shader first reads the resource’s address and then reads the actual data. Just like XMFLOAT4X4* in the example C++ function signature!

- For the domain shader’s structured buffers we’ll finally use descriptors and a descriptor heap. That means we need to create a descriptor heap to hold 2 descriptors (one for every buffer) and the descriptors themselves. In order to pass this information to the root signature we need to pack it into a descriptor table. A descriptor table just tells where in the descriptor heap to look and how many descriptors to use. When we access a buffer in the shader, the table is read first, then the descriptor, and finally the actual data. Just like std::vector<XMFLOAT3*>* in the example C++ function signature!

“Why do we need descriptors or tables if we can pass everything as root constants?” The root signature has a very limited size - 64 DWORDs (1 DWORD == 32 bits). That means we can store 64 ints inside it, or 4 matrices. If there’s not enough space, the data will be stored somewhere else, which adds one more level of indirection. A root descriptor takes 2 DWORDs and a table only 1 DWORD.
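The size budget is easy to check with a small calculation. The sketch below uses the costs quoted above (1 DWORD per root constant, 2 per root descriptor, 1 per table); RootParam and the helper functions are hypothetical names for illustration and are not part of the D3D12 API.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

constexpr std::uint32_t kMaxRootSignatureDwords = 64;

enum class RootParamKind { Constants, Descriptor, DescriptorTable };

// Hypothetical, simplified description of one root parameter.
struct RootParam
{
    RootParamKind kind;
    std::uint32_t num32BitValues; // used only by Constants
};

std::uint32_t costInDwords(const RootParam& param)
{
    switch (param.kind)
    {
    case RootParamKind::Constants:       return param.num32BitValues; // 1 DWORD per constant
    case RootParamKind::Descriptor:      return 2;                    // a 64-bit GPU address
    case RootParamKind::DescriptorTable: return 1;
    }
    assert(false && "unknown root parameter kind");
    return 0;
}

std::uint32_t totalCostInDwords(const std::vector<RootParam>& params)
{
    std::uint32_t total = 0;
    for (const RootParam& param : params)
    {
        total += costInDwords(param);
    }
    return total;
}
```

For the demo (one root descriptor, 2 root constants and one descriptor table) the total is 2 + 2 + 1 = 5 DWORDs, far below the limit, while 4 matrices passed as root constants would take all 64.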

Interesting note - the NVIDIA guys recommend using root descriptors as much as you can, but the AMD guys recommend using tables.

Remember that the signature doesn’t contain any actual values - it just declares the types and the order of the parameters. The actual data will be passed later.

Knowing all this we can write our first directx 12 code.

// TeapotTutorial.h

Microsoft::WRL::ComPtr<ID3D12RootSignature> rootSignature;

// TeapotTutorial.cpp

void TeapotTutorial::createRootSignature()

{

// #1

D3D12_DESCRIPTOR_RANGE dsTransformAndColorSrvRange;

ZeroMemory(&dsTransformAndColorSrvRange, sizeof(dsTransformAndColorSrvRange));

dsTransformAndColorSrvRange.RangeType = D3D12_DESCRIPTOR_RANGE_TYPE_SRV; // we're using structured buffers - it's a SRV

dsTransformAndColorSrvRange.NumDescriptors = 2; // we have 2 structured buffers and 2 descriptors

dsTransformAndColorSrvRange.BaseShaderRegister = 0; // we start from the first register (t0)

dsTransformAndColorSrvRange.RegisterSpace = 0; // this allows us to use the same register name if we use different space

dsTransformAndColorSrvRange.OffsetInDescriptorsFromTableStart = D3D12_DESCRIPTOR_RANGE_OFFSET_APPEND;

// #2

D3D12_ROOT_PARAMETER dsTransformAndColorSrv;

ZeroMemory(&dsTransformAndColorSrv, sizeof(dsTransformAndColorSrv));

dsTransformAndColorSrv.ParameterType = D3D12_ROOT_PARAMETER_TYPE_DESCRIPTOR_TABLE;

dsTransformAndColorSrv.DescriptorTable = { 1, &dsTransformAndColorSrvRange }; // one range

dsTransformAndColorSrv.ShaderVisibility = D3D12_SHADER_VISIBILITY_DOMAIN; // only used in domain shader

//#3

D3D12_ROOT_PARAMETER dsObjCb;

ZeroMemory(&dsObjCb, sizeof(dsObjCb));

dsObjCb.ParameterType = D3D12_ROOT_PARAMETER_TYPE_CBV; // constant buffer

dsObjCb.Descriptor = { 0, 0 }; // first register (b0) in first register space

dsObjCb.ShaderVisibility = D3D12_SHADER_VISIBILITY_DOMAIN; // only used in domain shader

// #4

D3D12_ROOT_PARAMETER hsTessFactorsCb;

ZeroMemory(&hsTessFactorsCb, sizeof(hsTessFactorsCb));

hsTessFactorsCb.ParameterType = D3D12_ROOT_PARAMETER_TYPE_32BIT_CONSTANTS;

hsTessFactorsCb.Constants = { 0, 0, 2 }; // 2 constants in first register (b0) in first register space

hsTessFactorsCb.ShaderVisibility = D3D12_SHADER_VISIBILITY_HULL; // only used in hull shader

vector<D3D12_ROOT_PARAMETER> rootParameters{ dsObjCb, hsTessFactorsCb, dsTransformAndColorSrv };

// #5

D3D12_ROOT_SIGNATURE_FLAGS rootSignatureFlags{

D3D12_ROOT_SIGNATURE_FLAG_ALLOW_INPUT_ASSEMBLER_INPUT_LAYOUT | // we're using vertex and index buffers

D3D12_ROOT_SIGNATURE_FLAG_DENY_VERTEX_SHADER_ROOT_ACCESS |

D3D12_ROOT_SIGNATURE_FLAG_DENY_GEOMETRY_SHADER_ROOT_ACCESS |

D3D12_ROOT_SIGNATURE_FLAG_DENY_PIXEL_SHADER_ROOT_ACCESS

};

// #6

D3D12_ROOT_SIGNATURE_DESC rootSignatureDesc;

ZeroMemory(&rootSignatureDesc, sizeof(rootSignatureDesc));

rootSignatureDesc.NumParameters = static_cast<UINT>(rootParameters.size());

rootSignatureDesc.pParameters = rootParameters.data();

rootSignatureDesc.NumStaticSamplers = 0; // samplers can be stored in root signature separately and consume no space

rootSignatureDesc.pStaticSamplers = nullptr; // we're not using texturing

rootSignatureDesc.Flags = rootSignatureFlags;

// #7

ComPtr<ID3DBlob> signature;

ComPtr<ID3DBlob> error;

if (FAILED(D3D12SerializeRootSignature(&rootSignatureDesc, D3D_ROOT_SIGNATURE_VERSION_1, signature.ReleaseAndGetAddressOf(), error.ReleaseAndGetAddressOf())))

{

throw(runtime_error{ "Error serializing root signature" });

}

// finally create the root signature

// #8

if (FAILED(device->CreateRootSignature(0, signature->GetBufferPointer(), signature->GetBufferSize(), IID_PPV_ARGS(rootSignature.ReleaseAndGetAddressOf()))))

{

throw(runtime_error{ "Error creating root signature" });

}

}

We’re using 3 root parameters: a root descriptor for the domain shader’s constant buffer, 2 root constants for the hull shader’s constant buffer and a descriptor table for the 2 structured buffers.

First we create the descriptor table: for this we need to specify the range of descriptors we’ll use (#1) and pass this range to the parameter description (#2). Next we create a root descriptor for the domain constant buffer (#3). The final parameter is our root constants (#4). Notice how we specified the shader visibility for each parameter. The API will validate this input and tell us if there’s something wrong. Also notice how we excluded certain stages from accessing the root signature (#5) - this is recommended. Next we fill the root signature description struct (#6) with all the information we have so far and serialize it (#7). Serialization is necessary because there’s another way to create a root signature - directly in the shader, not in the C++ app. And finally we create our root signature (#8).

The DirectX team kindly provided a helper header that simplifies the creation of different structures - d3dx12.h. Though the header is not a part of DirectX 12, it’s well documented on MSDN and pretty solid. The D3D12_DESCRIPTOR_RANGE creation can be replaced with CD3DX12_DESCRIPTOR_RANGE, D3D12_ROOT_PARAMETER with CD3DX12_ROOT_PARAMETER and D3D12_ROOT_SIGNATURE_DESC with CD3DX12_ROOT_SIGNATURE_DESC. Using these helpers allows us to shorten and hence simplify the code dramatically. I deliberately removed all d3dx12.h dependencies from my code just to show how the API works under the hood.

When we serialize the signature we can get errors, which are written to the error blob. A lot of checks happen during serialization: for example, if we overlap registers for the same shader (have two b0) we’ll get an error. A very handy tool!
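To see why the demo is allowed to use b0 twice (once in the hull shader and once in the domain shader), here is a rough model of the overlap rule. It’s my own simplification and not the serializer’s real implementation, which performs many more checks; Binding, conflicts and hasOverlap are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

enum class Visibility { All, Vertex, Hull, Domain, Geometry, Pixel };

// Hypothetical, simplified record of one shader register claimed by a root parameter.
struct Binding
{
    char registerClass;      // 'b', 't', 'u' or 's'
    unsigned shaderRegister;
    unsigned registerSpace;
    Visibility visibility;
};

bool isKnownRegisterClass(char c)
{
    return c == 'b' || c == 't' || c == 'u' || c == 's';
}

// Two parameters can be seen by the same stage if either is visible to all
// stages or both name the same stage.
bool visibleToSameStage(Visibility a, Visibility b)
{
    return a == Visibility::All || b == Visibility::All || a == b;
}

bool conflicts(const Binding& a, const Binding& b)
{
    assert(isKnownRegisterClass(a.registerClass) && isKnownRegisterClass(b.registerClass));
    return a.registerClass == b.registerClass
        && a.shaderRegister == b.shaderRegister
        && a.registerSpace == b.registerSpace
        && visibleToSameStage(a.visibility, b.visibility);
}

bool hasOverlap(const std::vector<Binding>& bindings)
{
    for (std::size_t i = 0; i < bindings.size(); ++i)
    {
        for (std::size_t j = i + 1; j < bindings.size(); ++j)
        {
            if (conflicts(bindings[i], bindings[j]))
            {
                return true;
            }
        }
    }
    return false;
}
```

With the demo’s four bindings (b0 for the domain shader, b0 for the hull shader, t0 and t1 for the domain shader) hasOverlap returns false, because the two b0 registers are visible to different stages. Adding a second b0 for the domain shader makes it return true.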

Now that we know about the root signature we can update our diagram:

It’s a little bit messy, but if you follow the arrows you’ll see that it’s the same as the code. Notice how the hull shader constant buffer went away (because we’re using inlined root constants) and a descriptor heap for the structured buffers appeared. There’s still some mystery left, namely the size of the domain constant buffer (you’ll learn about this later).

The last method - ID3D12Device::CreateRootSignature - uses a device that we haven’t met yet. The device is a software representation of the hardware, and we’ll find out how to create one in the next section.

DirectX Initialization

As you remember the base initialization is done in the base class called Graphics. This is how we create a device:

// Graphics.h

Microsoft::WRL::ComPtr<ID3D12Device> device;

// Graphics.cpp

void Graphics::createDevice()

{

if (FAILED(D3D12CreateDevice(adapter.Get(), D3D_FEATURE_LEVEL_11_0, IID_PPV_ARGS(&device))))

{

throw(runtime_error{ "Error creating device." });

}

}

Simple enough. But what is this adapter? We could use nullptr instead and let the API choose the default adapter, but let’s see how we can select among the adapters that exist in our system. IDXGIAdapter is similar to the ID3D12Device interface - it’s also a representation of a GPU. It’s hard for me to tell why we need two similar interfaces that basically represent the same thing. Let’s think of it this way: the dxgi interface provides information about the GPU itself (vendor, name etc.), while the d3d interface allows us to manipulate it - create different resources, change states.

// Graphics.h

Microsoft::WRL::ComPtr<IDXGIAdapter3> adapter;

// Graphics.cpp

void Graphics::getAdapter()

{

ComPtr<IDXGIAdapter1> adapterTemp;

for (UINT adapterIndex{ 0 }; factory->EnumAdapters1(adapterIndex, adapterTemp.ReleaseAndGetAddressOf()) != DXGI_ERROR_NOT_FOUND; ++adapterIndex)

{

DXGI_ADAPTER_DESC1 desc;

ZeroMemory(&desc, sizeof(desc));

adapterTemp->GetDesc1(&desc);

if (desc.Flags & DXGI_ADAPTER_FLAG_SOFTWARE)

{

continue;

}

if (SUCCEEDED(adapterTemp.As(&adapter)))

{

break;

}

}

if (adapter == nullptr)

{

throw(runtime_error{ "Error getting an adapter." });

}

}

Here we just grab the first adapter that is not a software one (starting from Windows 8 there’s always a software adapter present in the system). But you can use different logic, like checking the vendor. For the enumeration we’re using a factory, which is an IDXGIFactory interface. So let’s create it too:

// Graphics.h

Microsoft::WRL::ComPtr<IDXGIFactory4> factory;

// Graphics.cpp

void Graphics::createFactory()

{

#if defined(_DEBUG)

ComPtr<ID3D12Debug> debugController;

if (SUCCEEDED(D3D12GetDebugInterface(IID_PPV_ARGS(&debugController))))

{

debugController->EnableDebugLayer();

}

#endif

UINT factoryFlags{ 0 };

#if defined(_DEBUG)

factoryFlags = DXGI_CREATE_FACTORY_DEBUG;

#endif

if (FAILED(CreateDXGIFactory2(factoryFlags, IID_PPV_ARGS(factory.ReleaseAndGetAddressOf()))))

{

throw(runtime_error{ "Error creating IDXGIFactory." });

}

}

Finally, no more new interfaces to depend on! Though there’s one new interface that nothing else depends on - ID3D12Debug. You should always use it in the debug configuration. When an error occurs it writes a detailed message to the output.

Now we can successfully compile the code we have, though we won’t see anything on the screen. That’s one of the downsides of programming with DirectX - we can’t get intermediate results, like rendering only one triangle of the teapot or shading only one pixel. We need to write a lot of code for both the CPU and the GPU just to end up with a black screen or artifacts.

At this point we have defined the shaders and the signature. But the GPU doesn’t know about our shaders - we only have several text files that are useful for us, not for the hardware. As you have guessed, we need to load our shaders to the graphics card. But first we need to compile them. Later we’ll use a new addition to the API which allows us to send this compiled data (and a lot of other stuff) to the GPU - the pipeline state object (or PSO for short).

Pipeline State Object

As you know, the GPU is a state machine - once it’s set up, it does the same actions over and over again until we change the state. In DirectX 12 the entire GPU state (plus or minus some minor things) is represented by the ID3D12PipelineState interface. This means that if you want to render the same object in wireframe and in solid mode you have to create 2 such objects, which differ only by fill mode. State creation is a heavy operation that should be avoided at runtime. Instead, all the states you need for your scene should be created as a part of initialization.
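The “create everything up front, only switch at runtime” idea can be shown without any DirectX types. In this sketch PipelineState is a hypothetical stand-in for ID3D12PipelineState that simply counts how many objects were built, and Renderer is an invented class, not the demo’s TeapotTutorial.

```cpp
#include <cassert>
#include <memory>

enum class FillMode { Wireframe, Solid };

// Hypothetical stand-in for ID3D12PipelineState; counts how many were built.
struct PipelineState
{
    explicit PipelineState(FillMode mode) : fillMode(mode) { ++createdCount(); }
    static int& createdCount() { static int count = 0; return count; }
    FillMode fillMode;
};

class Renderer
{
public:
    // All states are built once, during initialization.
    Renderer()
        : wireframe_(std::make_shared<PipelineState>(FillMode::Wireframe)),
          solid_(std::make_shared<PipelineState>(FillMode::Solid)),
          current_(wireframe_)
    {
    }

    // Runtime switch: only a pointer changes, nothing is created.
    void toggleFillMode()
    {
        current_ = (current_ == wireframe_) ? solid_ : wireframe_;
        assert(current_ != nullptr);
    }

    FillMode currentFillMode() const { return current_->fillMode; }

private:
    std::shared_ptr<PipelineState> wireframe_;
    std::shared_ptr<PipelineState> solid_;
    std::shared_ptr<PipelineState> current_;
};
```

A key press handler would then call toggleFillMode(), and the render loop would bind whatever state is current, so the expensive creation never happens in the middle of a frame.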

In our demo we’ll use 2 states - one for solid rendering with backface culling and another for wireframe rendering without culling. Creating a state means filling in a lot of structures and setting the shaders. We compile our shaders as a part of the build process in Visual Studio. This means that at application start there should be cso files somewhere which we need to load. The loading is very simple and can be done like this:

// TeapotTutorial.h

Microsoft::WRL::ComPtr<ID3DBlob> vertexShaderBlob;

Microsoft::WRL::ComPtr<ID3DBlob> hullShaderBlob;

Microsoft::WRL::ComPtr<ID3DBlob> domainShaderBlob;

Microsoft::WRL::ComPtr<ID3DBlob> pixelShaderBlob;

// TeapotTutorial.cpp

void TeapotTutorial::createShaders()

{

if (FAILED(D3DReadFileToBlob(L"VertexShader.cso", vertexShaderBlob.ReleaseAndGetAddressOf())))

{

throw(runtime_error{ "Error reading vertex shader." });

}

if (FAILED(D3DReadFileToBlob(L"HullShader.cso", hullShaderBlob.ReleaseAndGetAddressOf())))

{

throw(runtime_error{ "Error reading hull shader." });

}

if (FAILED(D3DReadFileToBlob(L"DomainShader.cso", domainShaderBlob.ReleaseAndGetAddressOf())))

{

throw(runtime_error{ "Error reading domain shader." });

}

if (FAILED(D3DReadFileToBlob(L"PixelShader.cso", pixelShaderBlob.ReleaseAndGetAddressOf())))

{

throw(runtime_error{ "Error reading pixel shader." });

}

}

And now the pipeline state creation (remember - we have 2 states):

// TeapotTutorial.h

Microsoft::WRL::ComPtr<ID3D12PipelineState> pipelineStateWireframe;

Microsoft::WRL::ComPtr<ID3D12PipelineState> pipelineStateSolid;

Microsoft::WRL::ComPtr<ID3D12PipelineState> currPipelineState;

// TeapotTutorial.cpp

void TeapotTutorial::createPipelineStateWireframe()

{

pipelineStateWireframe = createPipelineState(D3D12_FILL_MODE_WIREFRAME, D3D12_CULL_MODE_NONE);

currPipelineState = pipelineStateWireframe;

}

void TeapotTutorial::createPipelineStateSolid()

{

pipelineStateSolid = createPipelineState(D3D12_FILL_MODE_SOLID, D3D12_CULL_MODE_NONE);

}

ComPtr<ID3D12PipelineState> TeapotTutorial::createPipelineState(D3D12_FILL_MODE fillMode, D3D12_CULL_MODE cullMode)

{

// #1

vector<D3D12_INPUT_ELEMENT_DESC> inputElementDescs

{

{ "POSITION", 0, DXGI_FORMAT_R32G32B32_FLOAT, 0, 0, D3D12_INPUT_CLASSIFICATION_PER_VERTEX_DATA, 0 }

};

// #2

D3D12_RASTERIZER_DESC rasterizerDesc;

ZeroMemory(&rasterizerDesc, sizeof(rasterizerDesc));

rasterizerDesc.FillMode = fillMode;

rasterizerDesc.CullMode = cullMode;

rasterizerDesc.FrontCounterClockwise = FALSE;

rasterizerDesc.DepthBias = D3D12_DEFAULT_DEPTH_BIAS;

rasterizerDesc.DepthBiasClamp = D3D12_DEFAULT_DEPTH_BIAS_CLAMP;

rasterizerDesc.SlopeScaledDepthBias = D3D12_DEFAULT_SLOPE_SCALED_DEPTH_BIAS;

rasterizerDesc.DepthClipEnable = TRUE;

rasterizerDesc.MultisampleEnable = FALSE;

rasterizerDesc.AntialiasedLineEnable = FALSE;

rasterizerDesc.ForcedSampleCount = 0;

rasterizerDesc.ConservativeRaster = D3D12_CONSERVATIVE_RASTERIZATION_MODE_OFF;

// #3

D3D12_BLEND_DESC blendDesc;

ZeroMemory(&blendDesc, sizeof(blendDesc));

blendDesc.AlphaToCoverageEnable = FALSE;

blendDesc.IndependentBlendEnable = FALSE;

blendDesc.RenderTarget[0] = {

FALSE,FALSE,

D3D12_BLEND_ONE, D3D12_BLEND_ZERO, D3D12_BLEND_OP_ADD,

D3D12_BLEND_ONE, D3D12_BLEND_ZERO, D3D12_BLEND_OP_ADD,

D3D12_LOGIC_OP_NOOP,

D3D12_COLOR_WRITE_ENABLE_ALL

};

// #4

D3D12_DEPTH_STENCIL_DESC depthStencilDesc;

ZeroMemory(&depthStencilDesc, sizeof(depthStencilDesc));

depthStencilDesc.DepthEnable = TRUE;

depthStencilDesc.DepthWriteMask = D3D12_DEPTH_WRITE_MASK_ALL;

depthStencilDesc.DepthFunc = D3D12_COMPARISON_FUNC_LESS;

depthStencilDesc.StencilEnable = FALSE;

depthStencilDesc.StencilReadMask = D3D12_DEFAULT_STENCIL_READ_MASK;

depthStencilDesc.StencilWriteMask = D3D12_DEFAULT_STENCIL_WRITE_MASK;

const D3D12_DEPTH_STENCILOP_DESC defaultStencilOp = { D3D12_STENCIL_OP_KEEP, D3D12_STENCIL_OP_KEEP, D3D12_STENCIL_OP_KEEP, D3D12_COMPARISON_FUNC_ALWAYS };

depthStencilDesc.FrontFace = defaultStencilOp;

depthStencilDesc.BackFace = defaultStencilOp;

// #5

D3D12_GRAPHICS_PIPELINE_STATE_DESC pipelineStateDesc;

ZeroMemory(&pipelineStateDesc, sizeof(pipelineStateDesc));

pipelineStateDesc.InputLayout = { inputElementDescs.data(), static_cast<UINT>(inputElementDescs.size()) };

pipelineStateDesc.pRootSignature = rootSignature.Get();

pipelineStateDesc.VS = { vertexShaderBlob->GetBufferPointer(), vertexShaderBlob->GetBufferSize() };

pipelineStateDesc.HS = { hullShaderBlob->GetBufferPointer(), hullShaderBlob->GetBufferSize() };

pipelineStateDesc.DS = { domainShaderBlob->GetBufferPointer(), domainShaderBlob->GetBufferSize() };

pipelineStateDesc.PS = { pixelShaderBlob->GetBufferPointer(), pixelShaderBlob->GetBufferSize() };

pipelineStateDesc.RasterizerState = rasterizerDesc;

pipelineStateDesc.BlendState = blendDesc;

pipelineStateDesc.DepthStencilState = depthStencilDesc;

pipelineStateDesc.SampleMask = UINT_MAX;

pipelineStateDesc.PrimitiveTopologyType = D3D12_PRIMITIVE_TOPOLOGY_TYPE_PATCH;

pipelineStateDesc.NumRenderTargets = 1;

pipelineStateDesc.RTVFormats[0] = DXGI_FORMAT_R8G8B8A8_UNORM;

pipelineStateDesc.DSVFormat = DXGI_FORMAT_D32_FLOAT;

pipelineStateDesc.SampleDesc.Count = 1;

ComPtr<ID3D12PipelineState> pipelineState;

if (FAILED(device->CreateGraphicsPipelineState(&pipelineStateDesc, IID_PPV_ARGS(pipelineState.ReleaseAndGetAddressOf()))))

{

throw(runtime_error{ "Error creating pipeline state." });

}

return pipelineState;

}

Wow, that’s a lot of code. Let’s step through it block by block. First we create the input layout (#1). In the vertex shader we’re expecting only one input - the control point position - so we have only one entry in the D3D12_INPUT_ELEMENT_DESC vector. Next we’re creating a rasterizer state (#2). This structure can be replaced with the helper CD3DX12_RASTERIZER_DESC to make it shorter. Next is blend (#3) - it can be replaced with CD3DX12_BLEND_DESC. Next is depth stencil (#4 and CD3DX12_DEPTH_STENCIL_DESC). And finally the pipeline state object itself where we assign all the things we created (#5). All these structures are pretty simple and I think it should be clear from the names what each field represents so I won’t describe them in detail.

Interesting thing - though we assigned a root signature to the pso, this assignment is done only for validation, i.e. the api will check that the shader inputs correspond to the signature parameters. After pipeline state creation the information about the root signature is lost and we need to assign it again before drawing.

Yay, we have shaders, we have signature! But we still don’t have resources. Let’s fix that.

Creating Resources

Let’s recall which resources we need:

- Vertex Buffer

- Index Buffer

- Domain Constant Buffer

- Transforms Structured Buffer

- Colors Structured Buffer

Before we start creating these buffers let’s understand how the gpu stores resources. Similar to descriptors, resources are stored in memory called a resource heap. There are several types of heaps, but we’ll use only two - D3D12_HEAP_TYPE_DEFAULT and D3D12_HEAP_TYPE_UPLOAD. The first one is entirely gpu resident - once you create it you can’t access it from the cpu side, not even to upload initial data. This heap type is highly optimized and is faster than the others. The second one is accessible by both gpu and cpu. We need a default heap when we have static data - vertex and index buffers are good candidates. The upload heap is good when we change data every frame - for example a constant buffer. But if we can’t write data to a default buffer how can we use it? We can use an intermediate upload buffer, write data there from the cpu and give a command to the gpu to copy the data from upload to default. I wrote “give a command” - yes, that’s how we communicate with the gpu - we write predefined commands to a list and send this list to the graphics card where it executes.

All buffer instantiations are in the constructor of our demo class.

Vertex Buffer

This is a special buffer that doesn’t require a descriptor heap (but still requires a descriptor/view).

// TeapotTutorial.h

Microsoft::WRL::ComPtr<ID3D12Resource> controlPointsBuffer;

// TeapotTutorial.cpp

controlPointsBuffer = teapot_tutorial::createVertexBuffer(device.Get(), TeapotData::points, L"control points");

Looks simple, but it’s not. Here we can see a helper function teapot_tutorial::createVertexBuffer() which takes a device (remember, we need it to create almost everything for the application), the data and a buffer name. The last parameter is super helpful during debugging - in the visual studio graphics debugger we can easily find our buffer knowing its name. This helper function lives in a helper header called Utils.h (surprise surprise) and this is how it’s defined:

// Utils.h

template<typename T>

Microsoft::WRL::ComPtr<ID3D12Resource> createVertexBuffer(ID3D12Device* device, const std::vector<T>& data, std::wstring name = L"")

{

return details::createDefaultBuffer(device, data, D3D12_RESOURCE_STATE_VERTEX_AND_CONSTANT_BUFFER, name);

}

This function calls another helper function - createDefaultBuffer():

// Utils.h

template<typename T>

Microsoft::WRL::ComPtr<ID3D12Resource> createDefaultBuffer(ID3D12Device* device, const std::vector<T>& data, D3D12_RESOURCE_STATES finalState, std::wstring name = L"")

{

UINT elementSize{ static_cast<UINT>(sizeof(T)) };

UINT bufferSize{ static_cast<UINT>(data.size() * elementSize) };

// #1

D3D12_HEAP_PROPERTIES heapProps;

ZeroMemory(&heapProps, sizeof(heapProps));

heapProps.Type = D3D12_HEAP_TYPE_DEFAULT;

heapProps.CPUPageProperty = D3D12_CPU_PAGE_PROPERTY_UNKNOWN;

heapProps.MemoryPoolPreference = D3D12_MEMORY_POOL_UNKNOWN;

heapProps.CreationNodeMask = 1;

heapProps.VisibleNodeMask = 1;

// #2

D3D12_RESOURCE_DESC resourceDesc;

ZeroMemory(&resourceDesc, sizeof(resourceDesc));

resourceDesc.Dimension = D3D12_RESOURCE_DIMENSION_BUFFER;

resourceDesc.Alignment = 0;

resourceDesc.Width = bufferSize;

resourceDesc.Height = 1;

resourceDesc.DepthOrArraySize = 1;

resourceDesc.MipLevels = 1;

resourceDesc.Format = DXGI_FORMAT_UNKNOWN;

resourceDesc.SampleDesc.Count = 1;

resourceDesc.SampleDesc.Quality = 0;

resourceDesc.Layout = D3D12_TEXTURE_LAYOUT_ROW_MAJOR;

resourceDesc.Flags = D3D12_RESOURCE_FLAG_NONE;

// #3

Microsoft::WRL::ComPtr<ID3D12Resource> defaultBuffer;

HRESULT hr{ device->CreateCommittedResource(

&heapProps,

D3D12_HEAP_FLAG_NONE,

&resourceDesc,

D3D12_RESOURCE_STATE_COPY_DEST,

nullptr,

IID_PPV_ARGS(defaultBuffer.ReleaseAndGetAddressOf())) };

if (FAILED(hr))

{

throw(runtime_error{ "Error creating a default buffer." });

}

defaultBuffer->SetName(name.c_str());

heapProps.Type = D3D12_HEAP_TYPE_UPLOAD;

// #4

Microsoft::WRL::ComPtr<ID3D12Resource> uploadBuffer;

hr = device->CreateCommittedResource(

&heapProps,

D3D12_HEAP_FLAG_NONE,

&resourceDesc,

D3D12_RESOURCE_STATE_GENERIC_READ,

nullptr,

IID_PPV_ARGS(uploadBuffer.ReleaseAndGetAddressOf()));

if (FAILED(hr))

{

throw(runtime_error{ "Error creating an upload buffer." });

}

// #5

ComPtr<ID3D12CommandAllocator> commandAllocator;

if (FAILED(device->CreateCommandAllocator(D3D12_COMMAND_LIST_TYPE_DIRECT, IID_PPV_ARGS(commandAllocator.ReleaseAndGetAddressOf()))))

{

throw(runtime_error{ "Error creating a command allocator." });

}

// #6

Microsoft::WRL::ComPtr<ID3D12GraphicsCommandList> commandList;

if (FAILED(device->CreateCommandList(0, D3D12_COMMAND_LIST_TYPE_DIRECT, commandAllocator.Get(), nullptr, IID_PPV_ARGS(commandList.ReleaseAndGetAddressOf()))))

{

throw(runtime_error{ "Error creating a command list." });

}

// #7

D3D12_COMMAND_QUEUE_DESC queueDesc;

ZeroMemory(&queueDesc, sizeof(queueDesc));

queueDesc.Type = D3D12_COMMAND_LIST_TYPE_DIRECT;

queueDesc.Priority = D3D12_COMMAND_QUEUE_PRIORITY_NORMAL;

queueDesc.Flags = D3D12_COMMAND_QUEUE_FLAG_NONE;

queueDesc.NodeMask = 0;

Microsoft::WRL::ComPtr<ID3D12CommandQueue> commandQueue;

if (FAILED(device->CreateCommandQueue(&queueDesc, IID_PPV_ARGS(commandQueue.ReleaseAndGetAddressOf()))))

{

throw(runtime_error{ "Error creating a command queue." });

}

// #8

void* pData;

if (FAILED(uploadBuffer->Map(0, NULL, &pData)))

{

throw(runtime_error{ "Failed map intermediate resource." });

}

memcpy(pData, data.data(), bufferSize);

uploadBuffer->Unmap(0, NULL);

// #9

commandList->CopyBufferRegion(defaultBuffer.Get(), 0, uploadBuffer.Get(), 0, bufferSize);

// #10

D3D12_RESOURCE_BARRIER barrierDesc;

ZeroMemory(&barrierDesc, sizeof(barrierDesc));

barrierDesc.Type = D3D12_RESOURCE_BARRIER_TYPE_TRANSITION;

barrierDesc.Flags = D3D12_RESOURCE_BARRIER_FLAG_NONE;

barrierDesc.Transition.pResource = defaultBuffer.Get();

barrierDesc.Transition.StateBefore = D3D12_RESOURCE_STATE_COPY_DEST;

barrierDesc.Transition.StateAfter = finalState;

barrierDesc.Transition.Subresource = D3D12_RESOURCE_BARRIER_ALL_SUBRESOURCES;

commandList->ResourceBarrier(1, &barrierDesc);

// #11

commandList->Close();

std::vector<ID3D12CommandList*> ppCommandLists{ commandList.Get() };

commandQueue->ExecuteCommandLists(static_cast<UINT>(ppCommandLists.size()), ppCommandLists.data());

// #12

UINT64 initialValue{ 0 };

Microsoft::WRL::ComPtr<ID3D12Fence> fence;

if (FAILED(device->CreateFence(initialValue, D3D12_FENCE_FLAG_NONE, IID_PPV_ARGS(fence.ReleaseAndGetAddressOf()))))

{

throw(runtime_error{ "Error creating a fence." });

}

// #13

HANDLE fenceEventHandle{ CreateEvent(nullptr, FALSE, FALSE, nullptr) };

if (fenceEventHandle == NULL)

{

throw(runtime_error{ "Error creating a fence event." });

}

// #14

if (FAILED(commandQueue->Signal(fence.Get(), 1)))

{

throw(runtime_error{ "Error siganalling buffer uploaded." });

}

// #15

if (FAILED(fence->SetEventOnCompletion(1, fenceEventHandle)))

{

throw(runtime_error{ "Failed set event on completion." });

}

// #16

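DWORD wait{ WaitForSingleObject(fenceEventHandle, 10000) };

// The event handle is a winapi object and is not released automatically.

CloseHandle(fenceEventHandle);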

if (wait != WAIT_OBJECT_0)

{

throw(runtime_error{ "Failed WaitForSingleObject()." });

}

return defaultBuffer;

}

Looks scary. But going step by step we’ll get familiar with a lot of directx 12 concepts. At the top we’re creating a couple of structures that describe that we’re going to create a default heap (#1) and a buffer (#2). Notice that we’re not specifying the purpose of the buffer - we’re just declaring the size. In other words we’re asking for a certain amount of memory. Among other things notice that Alignment is 0. Remember, on the previous diagram we had 2 numbers for the resource size - the actual data size and the aligned size. We should specify 64KB for the buffer or 0 (which will set it to 64KB under the hood). We can use the helper structures CD3DX12_HEAP_PROPERTIES and CD3DX12_RESOURCE_DESC here.

Next we’re calling the ID3D12Device::CreateCommittedResource() method that actually reserves the memory (#3). This method asks the gpu to find free space. There are other methods for resource creation - for example we can use already reserved memory and create a placed resource in it - just like the placement new operator in c++ (we’ll not use this in our demo).

Next we’re creating an intermediate resource (#4). The only difference is that now we’re asking for upload heap so we can write to it from the cpu.

Please note the 4th parameter of the ID3D12Device::CreateCommittedResource() method. We used D3D12_RESOURCE_STATE_COPY_DEST for the default buffer and D3D12_RESOURCE_STATE_GENERIC_READ for the upload buffer. These are the initial states of our resources. For performance reasons gpu memory should be in a particular state when it’s accessed. An upload buffer should be created in the D3D12_RESOURCE_STATE_GENERIC_READ state. And in order to copy from the source to the destination, the destination should be in D3D12_RESOURCE_STATE_COPY_DEST.

Now it’s time to take a step back and understand how cpu and gpu communicate with each other. The cpu tells the gpu what to do via commands. There’s a special interface ID3D12GraphicsCommandList which has tons of methods and each method is an order to the gpu. Examples of such orders are ClearDepthStencilView() or DrawInstanced(). A command list is a cpu structure, meaning that it knows nothing about the gpu. The command list doesn’t allocate memory for its commands itself. Instead it uses another special interface - ID3D12CommandAllocator (#5). This object manages memory for commands and knows about the gpu. These two interfaces work together - first we need to create an allocator and later tell the command list to use this allocator for command memory allocation.

There are several types of command lists - copy, compute, bundle. We’ll use D3D12_COMMAND_LIST_TYPE_DIRECT - this type can record commands of all the mentioned types. Since the list and the allocator are tied together, they should have the same type.

When we create a list it is in the recording state, which means it’s ready to receive commands (#6). There’s also the ID3D12GraphicsCommandList::Reset() method which allows us to use the command list with a different allocator.

When we have a list filled with commands we need to tell gpu to do some work. We do this with ID3D12CommandQueue interface (#7). It should be the same type as our list and allocator.

Next we’re mapping the upload buffer into system memory (#8), copying our data into it, and recording our first command with ID3D12GraphicsCommandList::CopyBufferRegion() (#9). It tells the gpu to copy bufferSize bytes from the upload buffer (which actually points to system memory) to the default buffer.

After we’ve finished updating the resource we need to transition the default buffer to a state that allows correct access to it. This state is different for different resources. For example for a constant or vertex buffer it should be D3D12_RESOURCE_STATE_VERTEX_AND_CONSTANT_BUFFER, for a structured buffer - D3D12_RESOURCE_STATE_NON_PIXEL_SHADER_RESOURCE. So we’re recording a command that tells the gpu to put a transition barrier to the necessary state (#10). During this transition the gpu will not touch the resource and will wait until the transition is done. As you may guess this is an expensive operation. We can use the helper structure CD3DX12_RESOURCE_BARRIER here.
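One detail of the barrier that’s easy to trip over is that StateBefore has to match the state the resource is really in. The following toy sketch models just that bookkeeping on the cpu side. It is not how the driver works: ResourceState is a stand-in for a few D3D12_RESOURCE_STATES values and TrackedResource is a hypothetical helper that doesn’t exist in the demo.

```cpp
#include <cassert>

// Stand-ins for a few D3D12_RESOURCE_STATES values; the underlying numbers are arbitrary.
enum class ResourceState { CopyDest, VertexAndConstantBuffer, IndexBuffer, NonPixelShaderResource };

// Hypothetical cpu-side bookkeeping for a single resource.
struct TrackedResource
{
    ResourceState current;

    // Mirrors the shape of a transition barrier: it only succeeds when
    // `before` matches the state the resource is actually in.
    bool transition(ResourceState before, ResourceState after)
    {
        if (before != current)
        {
            return false; // with the real api the debug layer would complain here
        }
        current = after;
        return true;
    }
};
```

In createDefaultBuffer() this is why StateBefore is D3D12_RESOURCE_STATE_COPY_DEST: it’s the state the default buffer was created in, and only StateAfter changes between vertex, index and structured buffers.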

Finally we’re telling the gpu to execute our commands with the ID3D12CommandQueue::ExecuteCommandLists() method (#11). But before that we need to close the command list or we’ll get an error.

If we exit the createDefaultBuffer() method now we’ll get undefined behavior. When we tell the gpu to execute a list it doesn’t start immediately. Instead the commands are queued and nobody knows when they start or finish. That means that if we leave now the upload buffer will be destroyed (we’re not keeping a pointer to it) and by the time the gpu is ready to execute the copy command the source will no longer be valid.

Previously we talked about cpu-gpu communication. Now we’re interested in gpu-cpu talk. We do it with fences. A fence is nothing more than an integer value. After we’ve submitted a command list we can add one more command to the queue that will set the fence to the specified value. All that’s left to do is to check whether our fence has the expected value or not and if it doesn’t - just wait until it changes. Super simple, isn’t it? First we’re creating the ID3D12Fence itself (#12) and also a fenceEventHandle (#13). This handle is not a part of directx but of winapi. We’re asking the gpu to set the fence value with the ID3D12CommandQueue::Signal() method (#14). The first parameter is a fence object and the second is the value we want our fence to have after the command list has executed. Next we’re setting an event on completion with the ID3D12Fence::SetEventOnCompletion() method (#15). When the fence reaches the value given as the first parameter, the event (second parameter) will be raised. In WaitForSingleObject() (#16) we’re waiting for this to happen for the specified number of milliseconds (10000, i.e. 10 seconds, in our case, but it can be INFINITE). If at the moment of the call the fence already has the desired value it will return WAIT_OBJECT_0 immediately, otherwise it will wait.
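If the Signal() / SetEventOnCompletion() pair still feels abstract, the toy model below may help. It is single-threaded and has nothing to do with the real ID3D12Fence implementation: ToyFence and its methods are hypothetical names, and a plain callback plays the role of the winapi event. It only shows the two cases described above: the event fires later when the value is reached, or right away if the value has already been reached.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <utility>
#include <vector>

// Toy single-threaded model of a fence; not the real ID3D12Fence.
class ToyFence
{
public:
    explicit ToyFence(std::uint64_t initialValue) : completed{ initialValue } {}

    std::uint64_t completedValue() const { return completed; }

    // Plays the role of SetEventOnCompletion(): run `onReached` once the fence
    // reaches `value`. If it already has, run it right away.
    void setEventOnCompletion(std::uint64_t value, std::function<void()> onReached)
    {
        if (completed >= value)
        {
            onReached();
            return;
        }
        pending.push_back({ value, std::move(onReached) });
    }

    // Plays the role of the gpu reaching the Signal() command in the queue.
    void signal(std::uint64_t value)
    {
        completed = value;
        std::vector<Waiter> waiting;
        waiting.swap(pending); // callbacks may register new waiters safely
        for (Waiter& w : waiting)
        {
            if (completed >= w.value) { w.onReached(); }
            else { pending.push_back(std::move(w)); }
        }
    }

private:
    struct Waiter
    {
        std::uint64_t value;
        std::function<void()> onReached;
    };

    std::uint64_t completed;
    std::vector<Waiter> pending;
};
```

In the real code the "callback" is the event becoming signalled, and WaitForSingleObject() is what blocks the cpu thread until that happens.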

Finally we’re returning created default buffer to the caller.

Now we need to create a view for our resource. Remember that resource is just a bunch of data in memory - we need to describe this data so the gpu can use it correctly.

// TeapotTutorial.h

D3D12_VERTEX_BUFFER_VIEW controlPointsBufferView;

// TeapotTutorial.cpp

using PointType = decltype(TeapotData::points)::value_type;

controlPointsBufferView.BufferLocation = controlPointsBuffer->GetGPUVirtualAddress();

controlPointsBufferView.StrideInBytes = static_cast<UINT>(sizeof(PointType));

controlPointsBufferView.SizeInBytes = static_cast<UINT>(controlPointsBufferView.StrideInBytes * TeapotData::points.size());

Index Buffer

Similar to vertex buffer this buffer doesn’t require a descriptor heap.

// TeapotTutorial.h

Microsoft::WRL::ComPtr<ID3D12Resource> controlPointsIndexBuffer;

// TeapotTutorial.cpp

controlPointsIndexBuffer = teapot_tutorial::createIndexBuffer(device.Get(), TeapotData::patches, L"patches");

And

// Utils.h

template<typename T>

Microsoft::WRL::ComPtr<ID3D12Resource> createIndexBuffer(ID3D12Device* device, const std::vector<T>& data, std::wstring name = L"")

{

return details::createDefaultBuffer(device, data, D3D12_RESOURCE_STATE_INDEX_BUFFER, name);

}

Here we changed the final state of our buffer to D3D12_RESOURCE_STATE_INDEX_BUFFER. All other code remains the same as for the vertex buffer.

The view is also very simple:

// TeapotTutorial.h

D3D12_INDEX_BUFFER_VIEW controlPointsIndexBufferView;

// TeapotTutorial.cpp

controlPointsIndexBufferView.BufferLocation = controlPointsIndexBuffer->GetGPUVirtualAddress();

controlPointsIndexBufferView.Format = DXGI_FORMAT_R32_UINT;

controlPointsIndexBufferView.SizeInBytes = static_cast<UINT>(TeapotData::patches.size() * sizeof(uint32_t));

Structured buffers

Resource creation for these buffers is also the same as for the previous buffers:

// TeapotTutorial.h

Microsoft::WRL::ComPtr<ID3D12Resource> transformsBuffer;

Microsoft::WRL::ComPtr<ID3D12Resource> colorsBuffer;

// TeapotTutorial.cpp

transformsBuffer = teapot_tutorial::createStructuredBuffer(device.Get(), TeapotData::patchesTransforms, L"transforms");

colorsBuffer = teapot_tutorial::createStructuredBuffer(device.Get(), TeapotData::patchesColors, L"colors");

Where createStructuredBuffer is defined like this:

// Utils.h

template<typename T>

Microsoft::WRL::ComPtr<ID3D12Resource> createStructuredBuffer(ID3D12Device* device, const std::vector<T>& data, std::wstring name = L"")

{

return details::createDefaultBuffer(device, data, D3D12_RESOURCE_STATE_NON_PIXEL_SHADER_RESOURCE, name);

}

This buffer finally needs a descriptor heap which we’re creating with the following code:

// TeapotTutorial.h

Microsoft::WRL::ComPtr<ID3D12DescriptorHeap> transformsAndColorsDescHeap;

// TeapotTutorial.cpp

void TeapotTutorial::createTransformsAndColorsDescHeap()

{

D3D12_DESCRIPTOR_HEAP_DESC heapDesc;

ZeroMemory(&heapDesc, sizeof(heapDesc));

heapDesc.NumDescriptors = 2;

heapDesc.Flags = D3D12_DESCRIPTOR_HEAP_FLAG_SHADER_VISIBLE;

heapDesc.Type = D3D12_DESCRIPTOR_HEAP_TYPE_CBV_SRV_UAV;

heapDesc.NodeMask = 0;

if (FAILED(device->CreateDescriptorHeap(&heapDesc, IID_PPV_ARGS(transformsAndColorsDescHeap.ReleaseAndGetAddressOf()))))

{

throw(runtime_error{ "Error creating descriptor heap." });

}

}

Here we’re specifying that we need a heap for 2 descriptors. Remember - we have 2 structured buffers - transforms and colors. Next we’re saying that we want this heap to be accessible from the shader. Examples of heaps that can’t be shader visible are render target view and depth stencil view heaps. Also we’re defining a type. Constant buffer, srv and uav descriptors can live in the same heap and this is a good thing - having many heaps and switching between them is not performance friendly.

Now when we have a heap we need to fill it with descriptors.

// TeapotTutorial.cpp

using TransformType = decltype(TeapotData::patchesTransforms)::value_type;

using ColorType = decltype(TeapotData::patchesColors)::value_type;

teapot_tutorial::createSrv<TransformType>(device.Get(), transformsAndColorsDescHeap.Get(), 0, transformsBuffer.Get(), TeapotData::patchesTransforms.size());

teapot_tutorial::createSrv<ColorType>(device.Get(), transformsAndColorsDescHeap.Get(), 1, colorsBuffer.Get(), TeapotData::patchesColors.size());

Here we’re calling a method from our Utils.h header:

// Utils.h

template<typename T>

void createSrv(ID3D12Device* device, ID3D12DescriptorHeap* descHeap, int offset, ID3D12Resource* resource, size_t numElements)

{

// #1

D3D12_SHADER_RESOURCE_VIEW_DESC srvDesc;

ZeroMemory(&srvDesc, sizeof(srvDesc));

srvDesc.Format = DXGI_FORMAT_UNKNOWN;

srvDesc.ViewDimension = D3D12_SRV_DIMENSION_BUFFER;

srvDesc.Shader4ComponentMapping = D3D12_DEFAULT_SHADER_4_COMPONENT_MAPPING;

srvDesc.Buffer.FirstElement = 0;

srvDesc.Buffer.NumElements = static_cast<UINT>(numElements);

srvDesc.Buffer.StructureByteStride = static_cast<UINT>(sizeof(T));

srvDesc.Buffer.Flags = D3D12_BUFFER_SRV_FLAG_NONE;

// #2

static UINT descriptorSize{ device->GetDescriptorHandleIncrementSize(D3D12_DESCRIPTOR_HEAP_TYPE_CBV_SRV_UAV) };

D3D12_CPU_DESCRIPTOR_HANDLE d{ descHeap->GetCPUDescriptorHandleForHeapStart() };

// #3

d.ptr += descriptorSize * offset;

// #4

device->CreateShaderResourceView(resource, &srvDesc, d);

}

We’re describing a view with the struct D3D12_SHADER_RESOURCE_VIEW_DESC (#1). Since we can have an arbitrary stride in a structured buffer the format should be defined as DXGI_FORMAT_UNKNOWN. Shader4ComponentMapping is a bit confusing for me - it looks like we can force some components to be 0 or 1. We don’t need this so we’re using the default mapping, but if you have information on how this can be useful please write in the comments. All other parameters are pretty straightforward.

Next we’re creating the descriptor in the heap. Descriptors for constant buffer, srv and uav have the same size (but it can differ among hardware vendors) and we’re requesting this size with the ID3D12Device::GetDescriptorHandleIncrementSize() method (#2). We’re searching for the place in the heap where we can create a descriptor (#3). The very first descriptor we can put at the heap start but for the next descriptor we need to offset the position by the size of the descriptor. And finally we’re asking the device to create the specified descriptor in the specified place (#4).
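The offset arithmetic from step #3 is small enough to isolate. This is only a sketch of the pointer math: CpuDescriptorHandle is a stand-in for D3D12_CPU_DESCRIPTOR_HANDLE (which also has a single ptr member), descriptorAt is a hypothetical helper name, and the increment size of 32 used in the comments is a made-up number, since the real one has to be queried from the device.

```cpp
#include <cassert>
#include <cstddef>

// Stand-in for D3D12_CPU_DESCRIPTOR_HANDLE, which is a struct with a single `ptr` member.
struct CpuDescriptorHandle { std::size_t ptr; };

// Returns the handle of the descriptor at `index`, given the heap start and the
// increment size reported by the device for that heap type.
inline CpuDescriptorHandle descriptorAt(CpuDescriptorHandle heapStart, std::size_t index, std::size_t incrementSize)
{
    // Descriptor 0 sits at the heap start; every next one is one increment further.
    // With a (made-up) increment of 32: index 0 -> start, index 1 -> start + 32.
    return CpuDescriptorHandle{ heapStart.ptr + index * incrementSize };
}
```

In our case index 0 is the transforms srv and index 1 is the colors srv. The d3dx12.h helper CD3DX12_CPU_DESCRIPTOR_HANDLE wraps the same computation if you prefer not to touch ptr by hand.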

Constant buffers

The last resources left are constant buffers. If you refer back to the root signature section you’ll recall that we don’t need a resource or a descriptor for the tessellation factors for the hull shader since we’re storing the constants directly in the signature. So nothing to do here, moving on to the next buffer.

The constant buffer for the domain shader stores a matrix. Recall that we store the descriptor for this resource in the root signature so there’s no need for a descriptor heap. But we still need the resource itself. If you look at the diagram we drew before you’ll notice that we still don’t know the size of the buffer. Let’s figure out why.

As you remember the commands are stored in the queue and don’t execute immediately after submission. Cpu and gpu have different timelines. Now imagine that we submitted a matrix for frame 1 to the constant buffer. The gpu is not executing yet. Now on the cpu we’re executing frame 2 and we need to update the matrix. If we write to the same place we did before, the matrix from frame 1 will be lost for the gpu. Or even worse - imagine that the gpu starts reading the matrix at the moment we’re updating it. How can we fix this? We can do exactly what we did when we created a default buffer. We can put a fence and stall the cpu until the gpu finishes reading the matrix. As you understand this is not the way to go - while the cpu works the gpu is idle and vice versa, even if cpu and gpu can complete their tasks at the same speed. The solution is to have several buffers - in this case the cpu can update buffers safely. This is the same reason why we have several back buffers - we’re displaying one while writing to another.

Several buffers are not a silver bullet. There can still be a situation when the cpu is faster than the gpu and we need to synchronize anyway.

So how many buffers do we need? Usually 2 or 3 is enough. In the demo I made this number adjustable but by default it’s 3. That means that we need to create 3 constant buffers. Or create one big buffer that can fit 3 matrices (remember that a resource is just a blob of memory). This is how we create our resource:

// TeapotTutorial.h

Microsoft::WRL::ComPtr<ID3D12Resource> constBuffer;

// TeapotTutorial.cpp

void TeapotTutorial::createConstantBuffer()

{

UINT elementSizeAligned{ (sizeof(XMFLOAT4X4) + 255) & ~255 };

UINT64 bufferSize{ elementSizeAligned * bufferCount };

D3D12_HEAP_PROPERTIES heapProps;

ZeroMemory(&heapProps, sizeof(heapProps));

heapProps.Type = D3D12_HEAP_TYPE_UPLOAD;

heapProps.CPUPageProperty = D3D12_CPU_PAGE_PROPERTY_UNKNOWN;

heapProps.MemoryPoolPreference = D3D12_MEMORY_POOL_UNKNOWN;

heapProps.CreationNodeMask = 1;

heapProps.VisibleNodeMask = 1;

D3D12_RESOURCE_DESC resourceDesc;

ZeroMemory(&resourceDesc, sizeof(resourceDesc));

resourceDesc.Dimension = D3D12_RESOURCE_DIMENSION_BUFFER;

resourceDesc.Alignment = 0;

resourceDesc.Width = bufferSize;

resourceDesc.Height = 1;

resourceDesc.DepthOrArraySize = 1;

resourceDesc.MipLevels = 1;

resourceDesc.Format = DXGI_FORMAT_UNKNOWN;

resourceDesc.SampleDesc.Count = 1;

resourceDesc.SampleDesc.Quality = 0;

resourceDesc.Layout = D3D12_TEXTURE_LAYOUT_ROW_MAJOR;

resourceDesc.Flags = D3D12_RESOURCE_FLAG_NONE;

HRESULT hr{ device->CreateCommittedResource(

&heapProps,

D3D12_HEAP_FLAG_NONE,

&resourceDesc,

D3D12_RESOURCE_STATE_GENERIC_READ,

nullptr,

IID_PPV_ARGS(constBuffer.ReleaseAndGetAddressOf())

) };

if (FAILED(hr))

{

throw(runtime_error{ "Error creating constant buffer." });

}

constBuffer->SetName(L"constants");

}

Since the constant buffer will be updated every frame there’s no need to create it in a default heap. Constant buffer reads in directx 12 should be aligned to 256B. If we have a 4x4 matrix of floats which requires 16 * 4 = 64B we can’t place the next matrix immediately after it or we’ll break the alignment rule and get an error. So our total size for 3 buffers will be 3 * 256 = 768B. And since a constant buffer is just a usual buffer it will be aligned to 64KB. Finally we can finish our diagram.

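The weird looking line (sizeof(XMFLOAT4X4) + 255) & ~255 calculates the next multiple of 256. Adding 255 pushes any size that isn’t already a multiple of 256 past the next boundary, and masking with ~255 clears the low 8 bits.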
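The same trick works for any power-of-two alignment, so it can be written once as a small function. This is a sketch rather than code from the demo: alignUp and the two constants are hypothetical names, and the static_asserts simply restate the numbers from the text (64B matrix, 256B slots, 64KB buffer alignment).

```cpp
#include <cassert>
#include <cstdint>

// Rounds `size` up to the next multiple of `alignment`.
// Only valid when `alignment` is a power of two.
constexpr std::uint64_t alignUp(std::uint64_t size, std::uint64_t alignment)
{
    return (size + alignment - 1) & ~(alignment - 1);
}

constexpr std::uint64_t constantBufferAlignment{ 256 };   // per-element alignment from the text
constexpr std::uint64_t bufferResourceAlignment{ 65536 }; // 64KB

static_assert(alignUp(64, constantBufferAlignment) == 256, "a 4x4 float matrix occupies one 256B slot");
static_assert(alignUp(256, constantBufferAlignment) == 256, "already aligned sizes are unchanged");
static_assert(alignUp(257, constantBufferAlignment) == 512, "one byte over spills into the next slot");
static_assert(alignUp(3 * 256, bufferResourceAlignment) == 65536, "768B of data sits in a 64KB allocation");
```

Because the function is constexpr, the checks run at compile time and cost nothing at runtime.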

One more time, since this is very important - in our case we have 1 buffer which can hold 3 matrices. In every frame we can safely update the corresponding matrix. Let’s call these frames buffered frames. But if we have all 3 frames in flight (i.e. the gpu hasn’t finished rendering any of them yet) we can’t update our buffer and we have to wait.
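Here is a rough sketch of what "update the corresponding matrix" means in terms of bytes. It doesn’t touch Direct3D at all: Matrix4x4 stands in for XMFLOAT4X4, a std::vector of bytes stands in for the memory we would get from Map() on the upload buffer, and writeFrameConstants is a hypothetical helper name.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

// Stand-in for DirectX::XMFLOAT4X4: 16 floats, 64 bytes.
struct Matrix4x4 { float m[16]; };
static_assert(sizeof(Matrix4x4) == 64, "expected a tightly packed 4x4 float matrix");

constexpr std::size_t slotSize{ 256 }; // sizeof(Matrix4x4) rounded up to 256B

// `mapped` stands in for the pointer returned by Map() on the upload buffer.
// Copies `matrix` into the slot that belongs to `frameIndex` and returns the
// byte offset of that slot (to be added to the buffer's gpu virtual address).
inline std::size_t writeFrameConstants(std::vector<unsigned char>& mapped, std::size_t frameIndex, const Matrix4x4& matrix)
{
    const std::size_t offset{ frameIndex * slotSize };
    assert(offset + sizeof(Matrix4x4) <= mapped.size());
    std::memcpy(mapped.data() + offset, &matrix, sizeof(Matrix4x4));
    return offset;
}
```

Each buffered frame only ever writes to its own 256B slot, which is what makes it safe for the cpu to prepare frame 2 while the gpu is still reading the matrix of frame 1.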

At this point we have shaders, a device and resources but we’re still not ready to draw. We don’t have a buffer to draw into, a swap chain to present a back buffer and some other things.

DirectX Initialization part 2

You already know what command lists, command allocators and fences are - we used them when we created our resources. We need the same objects for rendering - after all rendering is just commands telling the gpu what to do.

We could create one command list, allocator and fence for the entire application and reuse them for both rendering and resource creation, but I decided to use a more functional approach and for simplicity created an isolated function for resource creation.

Remember that when we discussed the matrix constant buffer we decided to use multiple buffers to avoid stalls, so we need several copies of some resources for buffered frames (we’re using 3 frames by default but this number can be changed). For rendering we need 3 command allocators and 3 fences but only one command list. This is because once a list has been submitted and reset it can be reused immediately - the memory for its commands is managed by the allocator. So we can reuse the same list with several allocators.

If we used multiple threads for recording and submitting commands, we would need 3 * numThreads allocators and numThreads lists. That’s because while a list is recording with a particular allocator it can’t be used with another one until it’s closed.

This is how we’re creating necessary data:

// Graphics.h

std::vector<Microsoft::WRL::ComPtr<ID3D12CommandAllocator>> commandAllocators;

Microsoft::WRL::ComPtr<ID3D12GraphicsCommandList> commandList;

std::vector<Microsoft::WRL::ComPtr<ID3D12Fence>> fences;

std::vector<UINT64> fenceValues;

HANDLE fenceEventHandle;

// Graphics.cpp

void Graphics::createCommandAllocators()

{

for (UINT i{ 0 }; i < bufferCount; i++)

{

ComPtr<ID3D12CommandAllocator> commandAllocator;

if (FAILED(device->CreateCommandAllocator(D3D12_COMMAND_LIST_TYPE_DIRECT, IID_PPV_ARGS(commandAllocator.ReleaseAndGetAddressOf()))))

{

throw(runtime_error{ "Error creating command allocator." });

}

commandAllocators.push_back(commandAllocator);

}

}

void Graphics::createCommandList()

{

if (FAILED(device->CreateCommandList(0, D3D12_COMMAND_LIST_TYPE_DIRECT, commandAllocators[0].Get(), nullptr, IID_PPV_ARGS(commandList.ReleaseAndGetAddressOf()))))

{

throw(runtime_error{ "Error creating command list." });

}

if (FAILED(commandList->Close()))

{

throw(runtime_error{ "Error closing command list." });

}

}

void Graphics::createFences()

{

for (UINT i{ 0 }; i < bufferCount; i++)

{

UINT64 initialValue{ 0 };

ComPtr<ID3D12Fence> fence;

if (FAILED(device->CreateFence(initialValue, D3D12_FENCE_FLAG_NONE, IID_PPV_ARGS(fence.ReleaseAndGetAddressOf()))))

{

throw(runtime_error{ "Error creating fence." });

}

fences.push_back(fence);

fenceValues.push_back(initialValue);

}

}

void Graphics::createFenceEventHandle()

{

fenceEventHandle = CreateEvent(nullptr, FALSE, FALSE, nullptr);

if (fenceEventHandle == NULL)

{

throw(runtime_error{ "Error creating fence event." });

}

}

All this code should already be familiar to you. Here we’re creating the allocators, a list (which we close right away because we’re not going to record into it yet), the fences (one for each buffered frame) and one event handle. We need multiple fences for the same reason we need multiple allocators. Imagine we submitted commands for frame 1 and told the queue to signal a fence after this frame. We do the same for frames 2 and 3. Now when we’re ready to reuse allocator 1 we need to check fence 1, not 2 or 3 (those frames can still be in flight), and if the value is what we’re expecting we can safely reuse the memory. Otherwise we need to wait.
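The per-frame fence logic can be modelled on the cpu alone. The sketch below makes no D3D12 calls; FrameFenceModel and its members are hypothetical names that only imitate what the fences and fenceValues vectors track, and gpuFinished stands in for the gpu reaching the signal in the queue.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// CPU-only model of per-frame fences; no D3D12 calls are made here.
struct FrameFenceModel
{
    std::vector<std::uint64_t> expected;  // value the cpu asked the queue to signal
    std::vector<std::uint64_t> completed; // value the "gpu" has reached so far

    explicit FrameFenceModel(std::size_t frames)
        : expected(frames, 0), completed(frames, 0) {}

    // CPU side: commands for `frame` were submitted, a signal is queued after them.
    void submit(std::size_t frame) { ++expected[frame]; }

    // GPU side: all work submitted for `frame` has finished.
    void gpuFinished(std::size_t frame) { completed[frame] = expected[frame]; }

    // The allocator of `frame` may be reset only if its own fence caught up.
    bool canReuseAllocator(std::size_t frame) const
    {
        return completed[frame] >= expected[frame];
    }
};
```

If frames 0, 1 and 2 are submitted and only frame 0 has finished, canReuseAllocator(0) is true while frames 1 and 2 still report false, which is exactly why one shared fence value would not be enough here.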

Next we’ll create the command queue and the swap chain:

// Graphics.h

Microsoft::WRL::ComPtr<ID3D12CommandQueue> commandQueue;

Microsoft::WRL::ComPtr<IDXGISwapChain3> swapChain;

// Graphics.cpp

void Graphics::createCommandQueue()

{

D3D12_COMMAND_QUEUE_DESC queueDesc;

ZeroMemory(&queueDesc, sizeof(queueDesc));

queueDesc.Type = D3D12_COMMAND_LIST_TYPE_DIRECT;

queueDesc.Priority = D3D12_COMMAND_QUEUE_PRIORITY_NORMAL;

queueDesc.Flags = D3D12_COMMAND_QUEUE_FLAG_NONE;

queueDesc.NodeMask = 0;

HRESULT hr{ device->CreateCommandQueue(&queueDesc, IID_PPV_ARGS(commandQueue.ReleaseAndGetAddressOf())) };

if (FAILED(hr))

{

throw(runtime_error{ "Error creating command queue." });

}

}

void Graphics::createSwapChain()

{

POINT wSize(window->getSize());

DXGI_SWAP_CHAIN_DESC1 swapChainDesc;

ZeroMemory(&swapChainDesc, sizeof(swapChainDesc));

swapChainDesc.Width = static_cast<UINT>(wSize.x);

swapChainDesc.Height = static_cast<UINT>(wSize.y);

swapChainDesc.Format = DXGI_FORMAT_R8G8B8A8_UNORM;

swapChainDesc.Stereo = FALSE;

swapChainDesc.SampleDesc = { 1, 0 }; // no anti-aliasing

swapChainDesc.BufferUsage = DXGI_USAGE_RENDER_TARGET_OUTPUT;

swapChainDesc.BufferCount = bufferCount;

swapChainDesc.Scaling = DXGI_SCALING_NONE;

swapChainDesc.SwapEffect = DXGI_SWAP_EFFECT_FLIP_DISCARD;

swapChainDesc.Flags = 0;

ComPtr<IDXGISwapChain1> swapChain1;

if (FAILED(factory->CreateSwapChainForHwnd(commandQueue.Get(), window->getHandle(), &swapChainDesc, nullptr, nullptr, swapChain1.ReleaseAndGetAddressOf())))

{

throw(runtime_error{ "Error creating IDXGISwapChain1." });

}

if (FAILED(swapChain1.As(&swapChain)))

{

throw(runtime_error{ "Error creating IDXGISwapChain3." });

}

}

You should already be familiar with the command queue. The swap chain concept hasn’t changed since directx 11; the only interesting thing is that you need to pass the command queue when creating the swap chain. Here the bufferCount variable is the number of buffered frames.

Now it’s time to create back buffers:

// Graphics.h

std::vector<Microsoft::WRL::ComPtr<ID3D12Resource>> swapChainBuffers;

Microsoft::WRL::ComPtr<ID3D12DescriptorHeap> descHeapRtv;

// Graphics.cpp

void Graphics::getSwapChainBuffers()

{

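swapChainBuffers.resize(bufferCount); // make sure there is a slot for every back buffer before indexing

for (UINT i{ 0 }; i < bufferCount; i++)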

{

if (FAILED(swapChain->GetBuffer(i, IID_PPV_ARGS(swapChainBuffers[i].ReleaseAndGetAddressOf()))))

{

throw(runtime_error{ "Error getting buffer." });

}

}

}

void Graphics::createDescriptoprHeapRtv()

{

D3D12_DESCRIPTOR_HEAP_DESC heapDesc;

ZeroMemory(&heapDesc, sizeof(heapDesc));

heapDesc.Type = D3D12_DESCRIPTOR_HEAP_TYPE_RTV;

heapDesc.NumDescriptors = bufferCount;

heapDesc.NodeMask = 0;

heapDesc.Flags = D3D12_DESCRIPTOR_HEAP_FLAG_NONE;

if (FAILED(device->CreateDescriptorHeap(&heapDesc, IID_PPV_ARGS(descHeapRtv.ReleaseAndGetAddressOf()))))

{

throw(runtime_error{ "Error creating descriptor heap." });

}

UINT rtvStep{ device->GetDescriptorHandleIncrementSize(D3D12_DESCRIPTOR_HEAP_TYPE_RTV) };

for (UINT i{ 0 }; i < bufferCount; i++)

{

D3D12_CPU_DESCRIPTOR_HANDLE d = descHeapRtv->GetCPUDescriptorHandleForHeapStart();

d.ptr += i * rtvStep;

device->CreateRenderTargetView(swapChainBuffers[i].Get(), nullptr, d);

}

}

When we specified the number of back buffers at swap chain creation they were created implicitly, so we don’t need to create the resources manually. But we still need to create descriptors for all our back buffers. In Graphics::getSwapChainBuffers() we’re obtaining pointers to the created resources and in Graphics::createDescriptoprHeapRtv() we’re creating a descriptor heap (recall that descriptors should be stored somewhere) with the type D3D12_DESCRIPTOR_HEAP_TYPE_RTV that is big enough to store the necessary number of views. Next we’re iterating over the obtained buffer pointers and for every resource we’re creating a corresponding view (recall that ID3D12Device::GetDescriptorHandleIncrementSize is the cross-vendor way to get the descriptor size).
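The handle arithmetic used in the loop above can be isolated into a tiny helper. This is only a sketch: CpuHandle is a stand-in for D3D12_CPU_DESCRIPTOR_HANDLE so that it builds without the Windows SDK, and offsetHandle is a hypothetical name, not a D3D12 function.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Stand-in for D3D12_CPU_DESCRIPTOR_HANDLE, which also holds a single
// pointer-sized `ptr` field.
struct CpuHandle
{
    std::size_t ptr;
};

// Returns the handle of the descriptor at `index`, given the heap start and
// the increment reported by GetDescriptorHandleIncrementSize for that heap type.
CpuHandle offsetHandle(CpuHandle heapStart, std::uint32_t index, std::uint32_t incrementSize)
{
    CpuHandle handle{ heapStart.ptr + static_cast<std::size_t>(index) * incrementSize };
    return handle;
}
```

The increment must always come from the device at runtime; hardcoding it would break on hardware where descriptors have a different size.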

For our demo we also need a depth buffer:

// Graphics.h

Microsoft::WRL::ComPtr<ID3D12Resource> depthStencilBuffer;

Microsoft::WRL::ComPtr<ID3D12DescriptorHeap> descHeapDepthStencil;

// Graphics.cpp

void Graphics::createDepthStencilBuffer()

{

D3D12_CLEAR_VALUE depthOptimizedClearValue;

ZeroMemory(&depthOptimizedClearValue, sizeof(depthOptimizedClearValue));

depthOptimizedClearValue.Format = DXGI_FORMAT_D32_FLOAT;

depthOptimizedClearValue.DepthStencil.Depth = 1.0f;

depthOptimizedClearValue.DepthStencil.Stencil = 0;

POINT wSize(window->getSize());

D3D12_HEAP_PROPERTIES heapProps;

ZeroMemory(&heapProps, sizeof(heapProps));

heapProps.Type = D3D12_HEAP_TYPE_DEFAULT;

heapProps.CPUPageProperty = D3D12_CPU_PAGE_PROPERTY_UNKNOWN;

heapProps.MemoryPoolPreference = D3D12_MEMORY_POOL_UNKNOWN;

heapProps.CreationNodeMask = 1;

heapProps.VisibleNodeMask = 1;

D3D12_RESOURCE_DESC resourceDesc;

ZeroMemory(&resourceDesc, sizeof(resourceDesc));

resourceDesc.Dimension = D3D12_RESOURCE_DIMENSION_TEXTURE2D;

resourceDesc.Alignment = 0;

resourceDesc.Width = wSize.x;

resourceDesc.Height = wSize.y;

resourceDesc.DepthOrArraySize = 1;

resourceDesc.MipLevels = 0;

resourceDesc.Format = DXGI_FORMAT_D32_FLOAT;

resourceDesc.SampleDesc.Count = 1;

resourceDesc.SampleDesc.Quality = 0;

resourceDesc.Layout = D3D12_TEXTURE_LAYOUT_UNKNOWN;

resourceDesc.Flags = D3D12_RESOURCE_FLAG_ALLOW_DEPTH_STENCIL;

HRESULT hr{ device->CreateCommittedResource(

&heapProps,

D3D12_HEAP_FLAG_NONE,

&resourceDesc,

D3D12_RESOURCE_STATE_DEPTH_WRITE,

&depthOptimizedClearValue,

IID_PPV_ARGS(depthStencilBuffer.ReleaseAndGetAddressOf())

) };

if (FAILED(hr))

{

throw(runtime_error{ "Error creating depth stencil buffer." });

}

}

void Graphics::createDescriptorHeapDepthStencil()

{

D3D12_DESCRIPTOR_HEAP_DESC heapDesc;

ZeroMemory(&heapDesc, sizeof(heapDesc));

heapDesc.NumDescriptors = 1;

heapDesc.Type = D3D12_DESCRIPTOR_HEAP_TYPE_DSV;

heapDesc.Flags = D3D12_DESCRIPTOR_HEAP_FLAG_NONE;

if (FAILED(device->CreateDescriptorHeap(&heapDesc, IID_PPV_ARGS(descHeapDepthStencil.ReleaseAndGetAddressOf()))))

{

throw(runtime_error{ "Error creating depth stencil descriptor heap." });

}

D3D12_DEPTH_STENCIL_VIEW_DESC depthStencilViewDesc;

ZeroMemory(&depthStencilViewDesc, sizeof(depthStencilViewDesc));

depthStencilViewDesc.Format = DXGI_FORMAT_D32_FLOAT;

depthStencilViewDesc.ViewDimension = D3D12_DSV_DIMENSION_TEXTURE2D;

depthStencilViewDesc.Flags = D3D12_DSV_FLAG_NONE;

device->CreateDepthStencilView(depthStencilBuffer.Get(), &depthStencilViewDesc, descHeapDepthStencil->GetCPUDescriptorHandleForHeapStart());

}

Nothing special here: we’re creating a texture resource in a heap of the default type (the depth buffer is used by the gpu without cpu access). Field names are self-explanatory: dimension, size, etc. In the flags we’re specifying that we want to use this texture as a depth stencil. And as we’ve done a million times already, we’re creating a descriptor heap and a descriptor inside it.

Wow. Finally. We’re ready to draw!

Rendering

We have everything for our demo: all resources and infrastructure. Now we’ll call the TeapotTutorial::render() method every frame, where we’ll tell the gpu which resources to use and which shaders to run.

// TeapotTutorial.cpp

void TeapotTutorial::render()

{

// #1

UINT frameIndex{ swapChain->GetCurrentBackBufferIndex() };

// #2

ComPtr<ID3D12CommandAllocator> commandAllocator{ commandAllocators[frameIndex] };

if (FAILED(commandAllocator->Reset()))

{

throw(runtime_error{ "Error resetting command allocator." });

}

if (FAILED(commandList->Reset(commandAllocator.Get(), nullptr)))

{

throw(runtime_error{ "Error resetting command list." });

}

// #3

commandList->SetPipelineState(currPipelineState.Get());

commandList->SetGraphicsRootSignature(rootSignature.Get());

commandList->RSSetViewports(1, &viewport);

commandList->RSSetScissorRects(1, &scissorRect);

// #4

ID3D12Resource* currBuffer{ swapChainBuffers[frameIndex].Get() };

// #5

D3D12_RESOURCE_BARRIER barrierDesc;

ZeroMemory(&barrierDesc, sizeof(barrierDesc));

barrierDesc.Type = D3D12_RESOURCE_BARRIER_TYPE_TRANSITION;

barrierDesc.Transition.pResource = currBuffer;

barrierDesc.Transition.Subresource = D3D12_RESOURCE_BARRIER_ALL_SUBRESOURCES;

barrierDesc.Transition.StateBefore = D3D12_RESOURCE_STATE_PRESENT;

barrierDesc.Transition.StateAfter = D3D12_RESOURCE_STATE_RENDER_TARGET;

barrierDesc.Flags = D3D12_RESOURCE_BARRIER_FLAG_NONE;

commandList->ResourceBarrier(1, &barrierDesc);

// #6

static UINT descriptorSize{ device->GetDescriptorHandleIncrementSize(D3D12_DESCRIPTOR_HEAP_TYPE_RTV) };

D3D12_CPU_DESCRIPTOR_HANDLE descHandleRtv(descHeapRtv->GetCPUDescriptorHandleForHeapStart());

descHandleRtv.ptr += frameIndex * descriptorSize;

D3D12_CPU_DESCRIPTOR_HANDLE descHandleDepthStencil(descHeapDepthStencil->GetCPUDescriptorHandleForHeapStart());

commandList->OMSetRenderTargets(1, &descHandleRtv, FALSE, &descHandleDepthStencil);

// #7

static float clearColor[]{ 0.1f, 0.1f, 0.1f, 1.0f };

commandList->ClearRenderTargetView(descHandleRtv, clearColor, 0, nullptr);

commandList->ClearDepthStencilView(descHeapDepthStencil->GetCPUDescriptorHandleForHeapStart(), D3D12_CLEAR_FLAG_DEPTH, 1.0f, 0, 0, nullptr);

commandList->IASetPrimitiveTopology(D3D_PRIMITIVE_TOPOLOGY_16_CONTROL_POINT_PATCHLIST);

// #8

vector<D3D12_VERTEX_BUFFER_VIEW> myArray{ controlPointsBufferView };

commandList->IASetVertexBuffers(0, static_cast<UINT>(myArray.size()), myArray.data());

// #9

vector<int> rootConstants{ tessFactor, tessFactor };

commandList->SetGraphicsRoot32BitConstants(1, static_cast<UINT>(rootConstants.size()), rootConstants.data(), 0);

// #10

ID3D12DescriptorHeap* ppHeaps[] = { transformsAndColorsDescHeap.Get() };

commandList->SetDescriptorHeaps(1, ppHeaps);

D3D12_GPU_DESCRIPTOR_HANDLE d { transformsAndColorsDescHeap->GetGPUDescriptorHandleForHeapStart() };

d.ptr += 0;

commandList->SetGraphicsRootDescriptorTable(2, d);

// #11

POINT windowSize(window->getSize());

float ratio{ static_cast<float>(windowSize.x) / static_cast<float>(windowSize.y) };

XMMATRIX projMatrixDX{ XMMatrixPerspectiveFovLH(XMConvertToRadians(45), ratio, 1.0f, 100.0f) };

XMVECTOR camPositionDX(XMVectorSet(0.0f, 0.0f, -10.0f, 0.0f));

XMVECTOR camLookAtDX(XMVectorSet(0.0f, 0.0f, 0.0f, 0.0f));

XMVECTOR camUpDX(XMVectorSet(0.0f, 1.0f, 0.0f, 0.0f));

XMMATRIX viewMatrixDX{ XMMatrixLookAtLH(camPositionDX, camLookAtDX, camUpDX) };

XMMATRIX viewProjMatrixDX{ viewMatrixDX * projMatrixDX };

UINT constDataSizeAligned{ (sizeof(XMFLOAT4X4) + 255) & ~255 };

POINT mousePoint(window->getMousePosition());