At this point, we have shaders, static data is uploaded to the GPU, the pipelines are set up, and we’re almost ready to start drawing. Except that we don’t have an image to draw into. An image is just memory, and this entire step is dedicated to the special images we draw into: swapchain images.

Technically speaking, we could create a regular image and draw into it. But after we finish, there’s the question of how to display it on the screen. That is where OS-specific stuff comes onto the scene. An operating system can have a desktop manager which is responsible for window compositing. It’s quite complicated and requires a Ph.D. to understand how all that works, but luckily we don’t need to go into the details to use it. What is important for us is the so-called swapchain. When we create one, Vulkan creates the necessary number of images with all the necessary parameters. Since the swapchain is very platform-specific, it’s not part of the core API and has to be enabled via an extension. To enable it, we add the extension name ash::extensions::khr::Swapchain::name() (or simply the "VK_KHR_swapchain" string) to the device extensions list in main.rs, which vulkan_base later uses to create the logical device.

Swapchain

We move to the vulkan_base workspace. Since the swapchain is a generic entity, it can be kept in the library. As discussed before, swapchain-related functions are not part of the core and have to be loaded separately. In C++, we do it by obtaining function pointers, but in Rust with ash, we just create an object which knows where to find the code (like we did with DebugUtils and Surface). ash::extensions::khr::Swapchain is such an object. Here are all the new fields of the vulkan_base::VulkanBase struct:

pub swapchain_loader: khr::Swapchain,

pub surface_capabilities: vk::SurfaceCapabilitiesKHR,

pub surface_extent: vk::Extent2D,

pub swapchain: vk::SwapchainKHR,

pub swapchain_images: Vec<vk::Image>,

pub swapchain_image_views: Vec<vk::ImageView>,

- swapchain_loader - the loader for swapchain functions. We create it in the vulkan_base::swapchain_loader function.

- surface_capabilities - well, surface capabilities. They hold some useful information such as min/max extent, min/max image count, and others. We get the capabilities in the vulkan_base::get_surface_capabilities function.

- surface_extent - we need to know the size of the surface to create a swapchain. We calculate the size in the vulkan_base::get_surface_extent function. Notice how the code handles the special values stored in the capabilities. This is what the Specification says about them:

  VkSurfaceCapabilitiesKHR::currentExtent is the current width and height of the surface, or the special value (0xFFFFFFFF, 0xFFFFFFFF) indicating that the surface size will be determined by the extent of a swapchain targeting the surface.

- swapchain - the swapchain itself. We create it in the vulkan_base::create_swapchain function. Let’s go step by step through the fields of the ash::vk::SwapchainCreateInfoKHR struct:

  - surface - the object we obtained in an earlier step. It was needed to select a physical device, and now we need it again to create a swapchain.

  - min_image_count - the minimum number of images the API should create when the swapchain is created. You have probably heard about double or triple buffering. If we don’t want to stall, we need multiple images - while one of the images is being presented, we can draw to another. In the app, we want at least 3 images. There’s no guarantee that a GPU can handle this number, so we need to take the surface capabilities into account.

  - image_format - the format of the surface images. We already got it when we selected the physical device.

  - image_color_space - we got it as well when we selected the physical device.

  - image_extent - discussed above.

  - image_array_layers - we’re using a single layer.

  - image_usage - the images will be used as ash::vk::ImageUsageFlags::COLOR_ATTACHMENT, i.e. we’ll draw into them. Recall that when we created the render pass, we specified the properties of a color attachment for the subpass. We want one of the swapchain images to be used in a render pass. We’ll see later how an image’s physical memory and render passes are bound together.

  - image_sharing_mode - matters when the images are accessed from multiple queue families. We have a single queue, hence ash::vk::SharingMode::EXCLUSIVE.

  - pre_transform - used on mobile devices and relates to screen rotation. We’re not handling this in the app and just pass what we have in the capabilities.

  - composite_alpha - in theory, we can make our Vulkan window semi-transparent on some systems. In the app we use ash::vk::CompositeAlphaFlagsKHR::OPAQUE.

  - present_mode - we already got it when we selected the physical device.

  - clipped - tells how pixels that are hidden (by another overlapping window, for example) are handled. true means the implementation is allowed to skip rendering to those pixels, so their contents are undefined if read back. Since we’re not reading back and/or examining pixels, we don’t care too much about this field.

  - old_swapchain - it’s important to understand that every time we resize a window, the OS invalidates the images, and we need to recreate the swapchain. Providing the old swapchain can, in theory, help to reuse resources and speed up the creation.

  We pass the filled info structure to the ash::extensions::khr::Swapchain::create_swapchain function. After that, we need to destroy the old swapchain if it existed.

- swapchain_images - if the swapchain creation was successful, we can get the images that the API created. We do it in the vulkan_base::get_swapchain_images function.

- swapchain_image_views - according to the Specification, image objects are not directly accessed by pipeline shaders for reading or writing image data. Instead, image views representing contiguous ranges of the image subresources and containing additional metadata are used for that purpose. Views must be created on images of compatible types, and must represent a valid subset of image subresources.

  In other words, we don’t supply an image directly to an API function. We need a view instead. We create the views in the vulkan_base::create_swapchain_image_views function. In the create info struct, we specify the image we want to create a view for, view_type is ash::vk::ImageViewType::TYPE_2D, format is the same as the image format, in components we can remap color channels (we don’t do it in the app), and in subresource_range we specify which part of the image to use - our image holds color and has a single mip level and a single array layer. Finally, we call the ash::Device::create_image_view function to create an image view and repeat this for every swapchain image.
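The special-value handling mentioned in the surface_extent item can be sketched roughly like this. It is not the code from vulkan_base::get_surface_extent: the Extent2D and SurfaceCapabilities types are hypothetical stand-ins for the ash structs (so the sketch compiles without ash), and falling back to the window size clamped to the min/max extents is an assumption about what a reasonable implementation does.

```rust
/// Hypothetical stand-in for `vk::Extent2D`.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub struct Extent2D {
    pub width: u32,
    pub height: u32,
}

/// Hypothetical stand-in with only the `vk::SurfaceCapabilitiesKHR` fields used here.
#[derive(Clone, Copy, Debug)]
pub struct SurfaceCapabilities {
    pub current_extent: Extent2D,
    pub min_image_extent: Extent2D,
    pub max_image_extent: Extent2D,
}

/// Special value from the Specification: the swapchain decides the surface size.
const UNDEFINED_EXTENT: u32 = 0xFFFF_FFFF;

/// Picks the extent for the swapchain images.
/// Assumes min_image_extent <= max_image_extent, as the capabilities should guarantee.
pub fn choose_surface_extent(
    caps: &SurfaceCapabilities,
    window_width: u32,
    window_height: u32,
) -> Extent2D {
    let undefined = caps.current_extent.width == UNDEFINED_EXTENT
        && caps.current_extent.height == UNDEFINED_EXTENT;

    if !undefined {
        // The surface already has a size, so the swapchain has to match it.
        return caps.current_extent;
    }

    // The surface has no size of its own: use the window size (an assumption),
    // clamped to what the surface supports.
    Extent2D {
        width: window_width.clamp(caps.min_image_extent.width, caps.max_image_extent.width),
        height: window_height.clamp(caps.min_image_extent.height, caps.max_image_extent.height),
    }
}
```

With real ash types, the same logic would read the fields of the same names from vk::SurfaceCapabilitiesKHR.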

These were the steps to create a swapchain. As discussed earlier, the swapchain has to be recreated every time the window is resized. So we move all the code to the vulkan_base::resize function.

NOTE: specifying the desired number of images during swapchain creation doesn’t mean that the runtime will create exactly that many. Recall that the field is called min_image_count (notice the min prefix), and the real number of images can be higher than what we requested.
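As a minimal sketch of taking the surface capabilities into account when asking for 3 images, the requested count can be clamped like this. The function name and parameters are hypothetical; the min and max arguments correspond to the min_image_count and max_image_count fields of the surface capabilities, where a max_image_count of 0 means there is no upper limit.

```rust
/// Clamps the number of images we would like to the range the surface supports.
/// `max_supported == 0` means the implementation reports no upper limit.
pub fn choose_min_image_count(desired: u32, min_supported: u32, max_supported: u32) -> u32 {
    // Never ask for fewer images than the surface requires.
    let count = desired.max(min_supported);

    if max_supported > 0 {
        // Never ask for more images than the surface allows.
        count.min(max_supported)
    } else {
        count
    }
}
```

Whatever value ends up in min_image_count, anything that exists once per image (image views, framebuffers) is best sized by the length of the swapchain_images vector rather than by the requested count.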

Framebuffers

I’m pretty sure that at this point, you already know that creating an object in Vulkan doesn’t mean that we’re done. With the swapchain, we allocated the necessary amount of memory. Now we need to somehow tell the API about the existence of these images.

If we were creating a regular image to sample from a shader, we’d do the same things we did with buffers - create an image, allocate memory, bind the image and the memory, allocate a descriptor and update it with the image information, and finally bind the descriptor to a descriptor set. But when dealing with an image that we’re supposed to render into, we take different steps. In the previous step, we created a render pass where we specified a single color attachment. Our swapchain image will serve as this attachment. In Vulkan, we use framebuffers to specify render targets, and we create them in the teapot::create_framebuffers function.

The first thing to note is that we need multiple framebuffers since we have multiple swapchain images - every framebuffer will refer to a different image. Next, we fill the ash::vk::FramebufferCreateInfo struct:

- render_pass - render passes operate in conjunction with framebuffers. Here we pass the render pass we created earlier.

- attachments - this is where we need an image. For every attachment in a render pass, we need to provide an image view. Recall that our render pass declared a single attachment, hence we’re setting a single view. Wonder what happens if we specify the wrong number of views?

  Validation Error: vkCreateFramebuffer(): VkFramebufferCreateInfo attachmentCount of 2 does not match attachmentCount of 1 of VkRenderPass 0x1d000000001d[render pass] being used to create Framebuffer. The Vulkan spec states: attachmentCount must be equal to the attachment count specified in renderPass

- width and height - we use the same size as the swapchain extent. Technically we can create a framebuffer smaller than the size of the attachments but not bigger.

- layers - we have a single layer.

We create a framebuffer with the ash::Device::create_framebuffer function.
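The bookkeeping described above (one framebuffer per swapchain image view, and an attachment count that must match the render pass) can be modelled without touching the API. This is only a sketch: ImageView and FramebufferInfo are hypothetical stand-ins for vk::ImageView and vk::FramebufferCreateInfo, the function name is made up, and the count check merely imitates what the validation layer reports.

```rust
/// Hypothetical stand-in for `vk::ImageView` (just an opaque handle).
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub struct ImageView(pub u64);

/// Hypothetical stand-in for the parts of `vk::FramebufferCreateInfo` discussed above.
#[derive(Clone, Debug, PartialEq, Eq)]
pub struct FramebufferInfo {
    pub attachments: Vec<ImageView>,
    pub width: u32,
    pub height: u32,
    pub layers: u32,
}

/// Builds one framebuffer description per swapchain image view.
/// `extent` is the swapchain extent as (width, height).
pub fn framebuffer_infos(
    render_pass_attachment_count: usize,
    swapchain_image_views: &[ImageView],
    extent: (u32, u32),
) -> Result<Vec<FramebufferInfo>, String> {
    swapchain_image_views
        .iter()
        .map(|&view| {
            // Our render pass declares a single color attachment,
            // so every framebuffer gets exactly one view.
            let attachments = vec![view];

            // Imitates the validation rule: the counts have to match.
            if attachments.len() != render_pass_attachment_count {
                return Err(format!(
                    "attachment count of {} does not match render pass attachment count of {}",
                    attachments.len(),
                    render_pass_attachment_count
                ));
            }

            Ok(FramebufferInfo {
                attachments,
                width: extent.0,
                height: extent.1,
                layers: 1,
            })
        })
        .collect()
}
```

In the real code, each of these descriptions would turn into a call to ash::Device::create_framebuffer with the render pass handle added.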

Since framebuffers, like render passes, are application-specific, we add them to the VulkanData struct. And since the framebuffers depend on the swapchain images, they need to be recreated every time the swapchain is recreated. We handle it in the VulkanData::resize function.

Also, we add one more variable teapot::VulkanData::should_resize of bool type. We don’t want to react to a window size change immediately. For example, the resize event can happen multiple times per frame, so it would be a good idea to postpone the swapchain rebuild until necessary. For that, we mark that we need a new swapchain by setting should_resize to true, and just before rendering a frame, we check if we need to handle the resize event. This check happens in the main function in the loop. Here we check for the variable, and if it’s true, we resize both vulkan_base::VulkanBase (which rebuilds the swapchain) and teapot::VulkanData (which rebuilds framebuffers) structs.
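The deferred resize can be sketched as a small flag-based helper. The ResizeState type and its methods are hypothetical and only illustrate the pattern; in the app, the flag lives in teapot::VulkanData, and the rebuild step would resize both vulkan_base::VulkanBase and teapot::VulkanData.

```rust
/// Hypothetical stand-in for the application state; only the flag logic matters here.
#[derive(Debug, Default)]
pub struct ResizeState {
    pub should_resize: bool,
}

impl ResizeState {
    /// Called from the window event handler. It only records that a resize
    /// happened, no matter how many events arrive before the next frame.
    pub fn on_window_resized(&mut self) {
        self.should_resize = true;
    }

    /// Called once per frame, just before rendering.
    /// Runs `rebuild` only if a resize is pending and returns whether it ran.
    pub fn resize_if_needed(&mut self, rebuild: impl FnOnce()) -> bool {
        if !self.should_resize {
            return false;
        }

        // Rebuild the swapchain first, then everything that depends on it.
        rebuild();
        self.should_resize = false;
        true
    }
}
```

Three resize events followed by one frame result in a single rebuild, which is the whole point of postponing the work.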

Clean

As usual, in the teapot::clean function, we destroy the framebuffers, and in the vulkan_base::clean function, we destroy the swapchain and the image views.

Conclusion

To present something in the window, we have to create special images. We do it by creating a swapchain. Together with the swapchain, Vulkan creates the necessary number of swapchain images. All rendering happens in a render pass. A render pass has slots for different kinds of attachments (color, input, depth-stencil). We assign the actual images to these slots via a framebuffer. For every render target, we need a new framebuffer, but the render pass can be the same. If the window surface changes, the swapchain has to be recreated. Since framebuffers depend on swapchain images, they have to be recreated too.

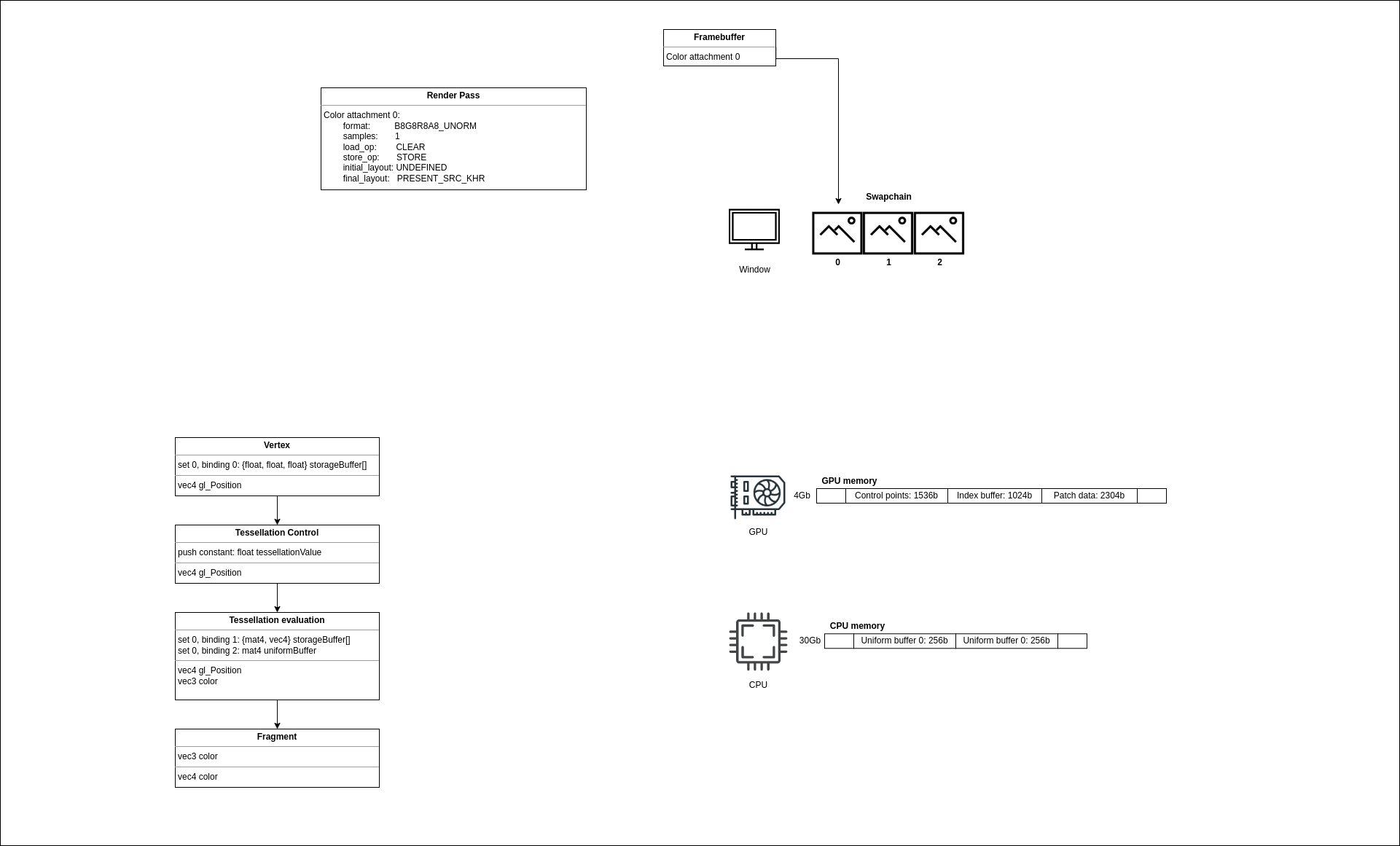

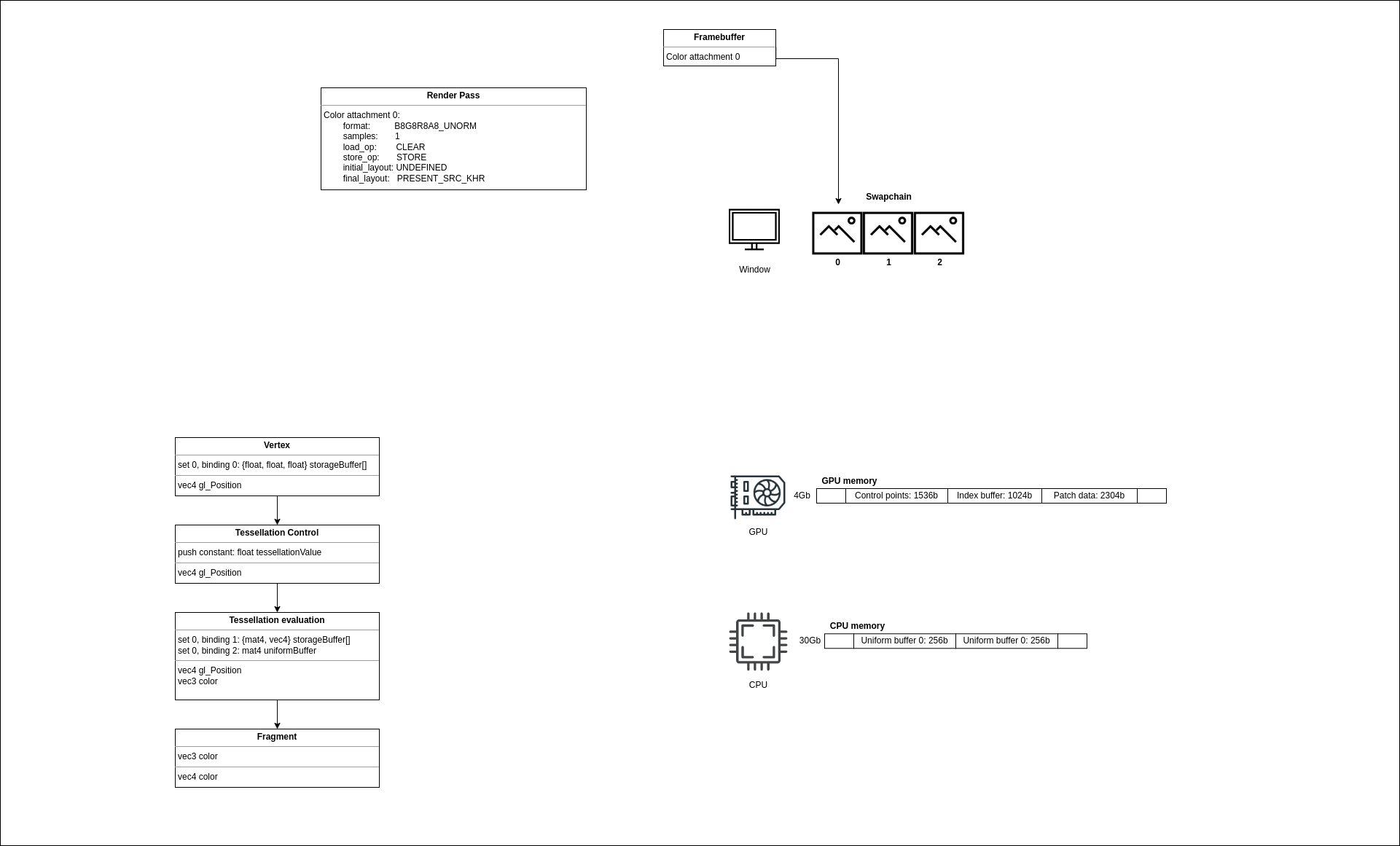

Let’s look at the diagram:

We can see a render pass from the previous step - it’s still not connected to anything, it just describes what types of images we plan to use when we draw. Also, we see 3 swapchain images and one of the framebuffers pointing to an image.

What’s next

Today we created the actual surface to draw into. We have all the resources uploaded to the GPU and waiting to be used. Can we finally draw? Yes! In the next step, the empty-window seal will be broken, and we will finally do what we came here for.

The source code for this step is here.

You can subscribe to my Twitter account to be notified when the new post is out or for comments and suggestions. If you found a bug, please raise an issue. If you have a question, you can start a discussion here.